
Working Around Performance Issues in LINQ to SQL and SQL CE 3.5

April 25, 2011 5:17 PM by Daniel Chambers (last modified on May 02, 2011 2:26 PM)

Recently I’ve been optimising LINQ to SQL queries running against an SQL CE 3.5 database, bringing them down from over 5 minutes to execute to only a few seconds. In this post I’m going to go into two of the biggest offenders I’ve seen so far in terms of killing query performance. Credit must go to my fellow Readifarian Colin Savage, with whom I worked to track down the offending LINQ query expressions and find solutions for them.

In this post, I’m going to be using the following demonstration LINQ to SQL classes. These are just demo classes; they aren’t recommended practice or even fully fleshed out. Note that Game.DeveloperID has an index against it in the database.

[Table(Name = "Developer")]

public class Developer

{

[Column(DbType = "int NOT NULL IDENTITY", IsPrimaryKey=true, IsDbGenerated=true)]

public int ID { get; set; }

[Column(DbType = "nvarchar(50) NOT NULL")]

public string Name { get; set; }

}

[Table(Name = "Game")]

public class Game

{

[Column(DbType = "int NOT NULL IDENTITY", IsPrimaryKey=true, IsDbGenerated=true)]

public int ID { get; set; }

[Column(DbType = "nvarchar(50) NOT NULL")]

public string Name { get; set; }

[Column(DbType = "int NOT NULL")]

public int DeveloperID { get; set; }

[Column(DbType = "int NOT NULL")]

public int Ordinal { get; set; }

}

Outer Joining and Null Testing Entities

The first performance killer Colin and I ran into is where you’re testing whether an outer joined entity is null. Here’s a query that includes the performance killer expression:

var query = from d in context.GetTable<Developer>()

join g in context.GetTable<Game>()

on d.ID equals g.DeveloperID into tempG

from g in tempG.DefaultIfEmpty()

select new

{

d,

//Inefficient

Name = g != null ? g.Name : String.Empty,

};

The above query is doing a left outer join of Game against Developer, and in the case that the developer doesn’t have any games, it’s setting the Name property of the anonymous projection object to String.Empty. Seems like a pretty reasonable query, right?

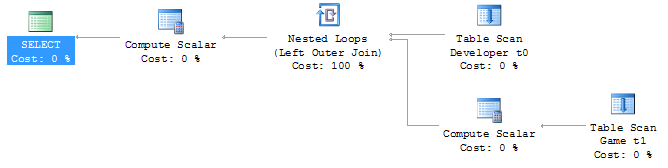

Wrong. In SQL CE, this is the SQL generated and the query plan created:

SELECT [t0].[ID], [t0].[Name],

(CASE

WHEN [t2].[test] IS NOT NULL THEN [t2].[Name]

ELSE CONVERT(NVarChar(50),'')

END) AS [Name2]

FROM [Developer] AS [t0]

LEFT OUTER JOIN (

SELECT 1 AS [test], [t1].[Name], [t1].[DeveloperID]

FROM [Game] AS [t1]

) AS [t2] ON [t0].[ID] = [t2].[DeveloperID]

Inefficient join between two table scans on SQL CE 3.5

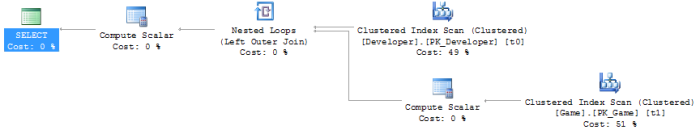

The problem is that the “SELECT 1 AS [test]” subquery in the SQL is causing SQL CE to do a join between two table scans, which on tables with lots of data is very very slow. Thankfully for those using real SQL Server (I tested on 2008 R2), it seems to be able to deal with this query form and generates an efficient query plan, as shown below.

Efficient join between two clustered index scans on SQL Server 2008 R2

So, what can we do to eliminate that subquery from the SQL? Well, we’re causing that subquery by performing a null test against the entity object in the LINQ expression (LINQ to SQL looks at the [test] column to see if there is a joined entity there; if it’s 1, there is, if it’s NULL, there isn’t). So how about this query instead?

var query = from d in context.GetTable<Developer>()

join g in context.GetTable<Game>()

on d.ID equals g.DeveloperID into tempG

from g in tempG.DefaultIfEmpty()

select new

{

d,

//Efficient

Name = g.ID != 0 ? g.Name : String.Empty,

};

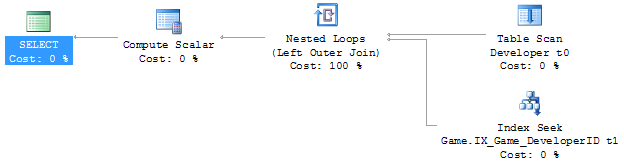

Success! This generates the following SQL and query plan against SQL CE 3.5:

SELECT [t0].[ID], [t0].[Name],

(CASE

WHEN [t1].[ID] <> 0 THEN CONVERT(NVarChar(50),[t1].[Name])

ELSE NULL

END) AS [Name2]

FROM [Developer] AS [t0]

LEFT OUTER JOIN [Game] AS [t1] ON [t0].[ID] = [t1].[DeveloperID]

Efficient join between a table scan and an index seek on SQL CE 3.5

The subquery has been removed from the SQL and the query plan reflects this; it now uses an index seek instead of a second table scan in order to do the join. This is much faster!
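One caveat worth spelling out: the g.ID != 0 form only works because LINQ to SQL translates the expression into SQL rather than running it. Here's a minimal sketch of the same join shape over in-memory lists (LINQ to Objects), where g really is a null reference for developers without games. The Dev and GameRow classes and the OuterJoinDemo helper are hypothetical stand-ins I've made up for illustration, not part of the demo classes above.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory stand-ins for the Developer and Game entities.
public class Dev { public int ID; public string Name; }
public class GameRow { public int ID; public string Name; public int DeveloperID; }

public static class OuterJoinDemo
{
    // Returns "developer:game" strings, with an empty game name for
    // developers that have no games (the left outer join case).
    public static List<string> Run(IEnumerable<Dev> devs, IEnumerable<GameRow> games)
    {
        var query = from d in devs
                    join g in games on d.ID equals g.DeveloperID into tempG
                    from g in tempG.DefaultIfEmpty() // g is null when nothing matched
                    // In LINQ to Objects the entity null test is required here;
                    // writing g.ID != 0 instead would throw a NullReferenceException
                    // for developers without games.
                    select d.Name + ":" + (g != null ? g.Name : String.Empty);
        return query.ToList();
    }
}
```

So if the same query expression might ever run against in-memory collections (in unit tests, for example), the SQL-friendly form isn't a drop-in replacement.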

Okay, that seems like a simple fix. So when we use it like below, putting g.Name into another object, it should keep working correctly, right?

var query = from d in context.GetTable<Developer>()

join g in context.GetTable<Game>()

on d.ID equals g.DeveloperID into tempG

from g in tempG.DefaultIfEmpty()

select new

{

d,

//Broken

Name = g.ID != 0 ? new Wrap { Str = g.Name } : null

};

Unfortunately, no. You may get an InvalidOperationException at runtime (depending on the data in your DB) with the confusing message “The null value cannot be assigned to a member with type System.Boolean which is a non-nullable value type.”

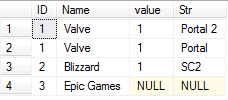

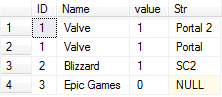

If we look at the SQL generated by this LINQ query and the data returned from the DB, we can see what’s causing this problem:

SELECT [t0].[ID], [t0].[Name],

(CASE

WHEN [t1].[ID] <> 0 THEN 1

WHEN NOT ([t1].[ID] <> 0) THEN 0

ELSE NULL

END) AS [value], [t1].[Name] AS [Str]

FROM [Developer] AS [t0]

LEFT OUTER JOIN [Game] AS [t1] ON [t0].[ID] = [t1].[DeveloperID]

The data returned by the broken query

It’s probably fair to assume that LINQ to SQL is using the [value] column internally to evaluate the “g.ID != 0” part of the LINQ query, but you’ll notice that in the data the value is NULL for one of the rows. This seems to be what is causing the “can’t assign a null to a bool” error we’re getting. I think this is a bug in LINQ to SQL because, as far as I can tell, this is pretty unintuitive behaviour. Note that this SQL query form, with its problematic CASE, WHEN, WHEN, ELSE expression, is only generated when we project the results into another object, not when we project them straight into the main projection object. I don’t know why this is.

So how can we work around this? Prepare to vomit just a little bit, up in the back of your mouth:

var query = from d in context.GetTable<Developer>()

join g in context.GetTable<Game>()

on d.ID equals g.DeveloperID into tempG

from g in tempG.DefaultIfEmpty()

select new

{

d,

//Fixed! (WTF??)

Name = (int?)g.ID != null ? new Wrap { Str = g.Name } : null

};

Mmm, tasty! :S Yes, that C# doesn’t even make sense and a good tool like ReSharper would tell you to remove that pointless int? cast, because ID is already an int and casting it to an int? and checking for null is entirely pointless. But this query form forces LINQ to SQL to generate the SQL we want:

SELECT [t0].[ID], [t0].[Name],

(CASE

WHEN ([t1].[ID]) IS NOT NULL THEN 1

ELSE 0

END) AS [value], [t1].[Name] AS [Str]

FROM [Developer] AS [t0]

LEFT OUTER JOIN [Game] AS [t1] ON [t0].[ID] = [t1].[DeveloperID]

The data returned by the fixed query

Note that the query now returns the expected 0 value instead of NULL in the last row.
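To see why the two CASE expressions behave differently, it helps to remember that in SQL any comparison against NULL is neither true nor false. For a developer with no games, [t1].[ID] is NULL, so neither WHEN branch of the broken query fires and the ELSE NULL is what comes back. Here's a small sketch that mimics both CASE expressions in plain C#, using int? to stand in for a nullable column. The SqlCaseDemo class and its method names are made up for illustration; this models the SQL semantics and isn't what LINQ to SQL runs internally.

```csharp
using System;

public static class SqlCaseDemo
{
    // Models SQL's "id <> 0": the result is unknown (null here) when id is NULL.
    public static bool? NotEqualZero(int? id)
    {
        if (!id.HasValue) return null;
        return id.Value != 0;
    }

    // Models: CASE WHEN id <> 0 THEN 1 WHEN NOT (id <> 0) THEN 0 ELSE NULL END
    public static int? BrokenCase(int? id)
    {
        bool? test = NotEqualZero(id);
        if (test == true) return 1;   // first WHEN
        if (test == false) return 0;  // second WHEN (NOT of unknown is still unknown)
        return null;                  // ELSE NULL, reached only when id is NULL
    }

    // Models: CASE WHEN id IS NOT NULL THEN 1 ELSE 0 END
    public static int FixedCase(int? id)
    {
        return id.HasValue ? 1 : 0;
    }
}
```

BrokenCase(null) gives null, which is the value that can't be stuffed into a bool, whereas FixedCase(null) gives 0.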

Outer Joining and Projecting into an Object Constructor

The other large performance killer Colin and I ran into is where you project into an object constructor. Here’s an example:

var query = from d in context.GetTable<Developer>()

join g in context.GetTable<Game>()

on d.ID equals g.DeveloperID into tempG

from g in tempG.DefaultIfEmpty()

select new

{

d,

//Inefficient

Name = (int?)g.ID != null ? new Wrap(g) : null

};

In the above query we’re passing the whole Game object into Wrap’s constructor, where it’ll copy the Game’s properties to its properties. This makes for neater queries, instead of having a massive object initialiser block where you set all the properties on Wrap with properties from Game. Too bad it reintroduces our little subquery issue back into the SQL:

SELECT [t0].[ID], [t0].[Name],

(CASE

WHEN ([t2].[ID]) IS NOT NULL THEN 1

ELSE 0

END) AS [value], [t2].[test], [t2].[ID] AS [ID2], [t2].[Name] AS [Name2], [t2].[DeveloperID], [t2].[Ordinal]

FROM [Developer] AS [t0]

LEFT OUTER JOIN (

SELECT 1 AS [test], [t1].[ID], [t1].[Name], [t1].[DeveloperID], [t1].[Ordinal]

FROM [Game] AS [t1]

) AS [t2] ON [t0].[ID] = [t2].[DeveloperID]

Unfortunately, the only way to get rid of the subquery again is to ditch the constructor and manually initialise the object with an object initialiser, making your queries much longer and noisier when there are a lot of properties:

var query = from d in context.GetTable<Developer>()

join g in context.GetTable<Game>()

on d.ID equals g.DeveloperID into tempG

from g in tempG.DefaultIfEmpty()

select new

{

d,

//Efficient

Name = (int?)g.ID != null ? new Wrap { Str = g.Name } : null

};

This gives us back our efficient (on SQL CE 3.5) SQL:

SELECT [t0].[ID], [t0].[Name],

(CASE

WHEN ([t1].[ID]) IS NOT NULL THEN 1

ELSE 0

END) AS [value], [t1].[Name] AS [Str]

FROM [Developer] AS [t0]

LEFT OUTER JOIN [Game] AS [t1] ON [t0].[ID] = [t1].[DeveloperID]

Conclusion

For those using LINQ to SQL against real SQL Server, these LINQ contortions aren’t needed to get good performance, as real SQL Server is able to make efficient query plans for the form of query that LINQ to SQL creates. However, SQL CE 3.5 can’t deal with these queries and so you need to munge your LINQ queries a bit to get them to perform, which is frustrating. Heading into the future, this won’t be a problem (hopefully) because SQL CE 4 doesn’t support LINQ to SQL and hopefully Entity Framework 4 doesn’t write queries like this (or maybe SQL CE 4 can just deal with it properly). For those on that software stack, it’s probably worth checking out what EF and SQL CE 4 are doing under the covers, as these problems highlight the need for software developers to watch what their LINQ providers are writing for them to make sure it’s performant.

Edit: For a way to help clean up the verbose hacked up LINQ queries that you end up with when working around these performance problems, check out this post.

Windows Phone 7 Performance Tips and Resources

April 10, 2011 9:57 AM by Daniel Chambers

Following in the same vein as my previous post, this post will detail a number of tips and resources I’ve found for developing on Windows Phone 7, specifically covering optimising application performance. Unfortunately, the phone is not your eight core beast with 16GB of RAM and a graphics card that chews 300W of power when under load, so you need to keep testing your performance constantly and making changes to the way you’re doing things.

Test on Phone Hardware Regularly

The WP7 emulator is pretty good, and you’ll probably find yourself using it all the time to test your application as you write it. However, with regards to performance, how your application runs in the emulator is not representative of how your application will run on an actual phone device. You need to be testing constantly on the phone hardware to make sure your app is running smoothly.

Understand the Frame Rate Counters

The default WP7 project templates have a line in their App.xaml.cs files which turns on the frame rate counters when your app is attached to the debugger. Jeff Wilcox has a great article explaining what these tiny numbers actually mean. Don’t forget that even though the numbers may be high on the emulator, they may not be so good on an actual phone.

Add a Memory Counter Alongside the Frame Rate Counters

Unfortunately, those frame rate counters do not show a number for the total app memory consumed. Memory usage is important to know because WP7 has a 90MB upper limit for apps running on devices with 256MB of RAM. However, Peter Torr has written some code you can use that adds a memory counter next to the frame rate counters.

Understand What is Rendered by the GPU and What is Not

On WP7 there are two threads that handle the UI: the UI thread and the compositor thread. Operations performed on the UI thread are processed by the CPU, and when things are handled by the compositor thread they are done by the GPU. It goes without saying that you want to offload as much as possible onto the GPU, rather than the CPU. However, what gets handled by the compositor thread and what gets handled by the UI thread comes down to exactly what sort of operations you are performing; you don’t have direct control of what executes where. This article on the Telerik Blog explains this concept in more detail.

Use the PerformanceProgressBar

At some point, you’re going to use an indeterminate progress bar in your app to indicate some sort of loading operation. When you do, you may notice your UI performance suffer. Unfortunately, this is because the indeterminate ProgressBar does its fancy animating dots on the UI thread, rather than on the compositor thread. Jeff Wilcox has a good post about this (scroll down to “The back story”). The solution? Use the PerformanceProgressBar in the Silverlight for Windows Phone Toolkit when using indeterminate progress bars, as it implements a workaround for the problem.

Load Images Asynchronously

If you’re loading images from the web to display in Image controls on your UI, you might be tempted to just bind the Image’s Source property in the XAML to a URL in your view model. Don’t do this! Unfortunately, a lot of the downloading and processing of the images is done on the UI thread, so if you have a few images, your performance will suffer. What you need to do is download the images in a background thread, and once they’re downloaded, display them.

Thankfully, David Anson has written a sweet attached property for Images that will do this for you. It’s ridiculously easy to use; you simply set the LowProfileImageLoader.UriSource attached property on the Image instead of the normal Source property. His code will automatically download the image in a background thread, and set the Image’s Source when it’s done. Go grab his code, or download it from NuGet.

Understand the Performance Issues Involved with a ListBox

The default ListBox on WP7 uses a VirtualizingStackPanel to lay out its items, and its control template includes a ScrollViewer to enable you to scroll up and down through its items. The VirtualizingStackPanel works together with the list box’s ScrollViewer and unloads items that are outside the current view, in order to reduce memory usage. This sounds like a great idea (especially when doing infinite scrolling lists), except on the phone it doesn’t currently perform very well. If you have a long list and scroll through it rapidly, the list will jerk around and you will be able to see the VirtualizingStackPanel loading items as it tries to keep up with your scrolling and fails to do so in time. Unfortunately, due to the limited resources available on phone devices, I haven’t found a clear works-for-everything solution to this problem. However, there are a few solutions that may or may not work for you.

The first potential solution is David Anson’s, from the same PhonePerformance project mentioned in the last tip. He chooses to do away with the VirtualizingStackPanel altogether and use a normal StackPanel. He then supplements it by only loading items further down the list as you scroll down to them; however, once the items are loaded, they stay loaded and aren’t later removed (as the VirtualizingStackPanel would do). This ensures the scrolling performance is buttery smooth. However, when testing this, I found that you need to keep a very close eye on your memory usage, especially when your list items include images. This means this technique is fine if you haven’t got a long/infinite list. If you do, however, you may want to investigate combining this technique with a form of paging. For example, you may do infinite scrolling for (say) five pages of data, then if the user wants to see pages 6-10, you provide a “next page” button that clears the list and loads page 6, with infinite scrolling loading pages 7-10.

The second potential solution is Peter Torr’s LazyListBox. Unfortunately, I didn’t get a chance to try this out when struggling with the ListBox performance issues on my recent project, so I can’t comment much on it. I do like its idea of having two ItemTemplates, one for items currently on screen and one for items off screen. This could mean you could remove those Image controls from the off screen items to save yourself memory and CPU usage.

Another cool way of improving performance with ListBoxes (as mentioned on this blog post) is, when adding new items to the list box, add them in small batches rather than all in one go. This allows the UI thread to take a breather and respond to user input faster.
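As a rough sketch of the batching idea, here's a small helper that splits a sequence into fixed-size chunks. BatchHelper and InBatches are names I've made up for illustration; the linked post may well go about it differently.

```csharp
using System;
using System.Collections.Generic;

public static class BatchHelper
{
    // Splits a sequence into consecutive batches of at most batchSize items.
    public static IEnumerable<List<T>> InBatches<T>(IEnumerable<T> source, int batchSize)
    {
        if (source == null) throw new ArgumentNullException("source");
        if (batchSize <= 0) throw new ArgumentOutOfRangeException("batchSize");
        return InBatchesIterator(source, batchSize);
    }

    private static IEnumerable<List<T>> InBatchesIterator<T>(IEnumerable<T> source, int batchSize)
    {
        List<T> batch = new List<T>(batchSize);
        foreach (T item in source)
        {
            batch.Add(item);
            if (batch.Count == batchSize)
            {
                yield return batch;
                batch = new List<T>(batchSize); // start a fresh batch
            }
        }
        // Hand back the final, partially filled batch, if any.
        if (batch.Count > 0)
            yield return batch;
    }
}
```

On the phone, one way to use this (an assumption on my part, not something I've measured) would be to queue a separate Dispatcher.BeginInvoke call per batch, each adding its items to the collection the ListBox is bound to, so the UI thread gets a chance to handle input between batches.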

Conclusion

WP7 is a cool platform, but you must never forget that you’re programming for a device that would explode if it even thought of playing Crysis 2. You need to be constantly testing your performance on an actual phone device to ensure your app is staying performant. Hopefully this post has given you a few pointers to some low-hanging performance fruit you can pick.

Windows Phone 7 Developer Tips and Resources

April 08, 2011 7:50 AM by Daniel Chambers

I’ve just recently finished up a Windows Phone 7 (WP7) development project at work, and during the project I collated a number of tips and useful resources that helped make the project a success, and I’m going to share those with you in this post.

Understand the Marketplace Submission Process

If you want to check out exactly what the marketplace submission process entails, the App Hub website has a very detailed walkthrough of the process. Thankfully, it’s not difficult; you just need to provide your XAP, some descriptions, some artwork and set your pricing.

One of the big questions you get asked as a Windows Phone developer is how long it will take for your application to be published on the Marketplace after it’s been submitted. Microsoft recently released some official numbers around that, and they claim that the average time to certification is 1.8 days. Of course, that’s an average, so you shouldn’t rely on that for your planning. Microsoft could take longer if they’re inundated with submissions. That same numbers blog post also says that 62% of applications pass on the first attempt. That means around 40% of apps fail, so you should allocate some time in your schedule to handle a potential submission failure.

Use an MVVM Framework

I chose to use Caliburn Micro as my MVVM Framework. I found that Caliburn Micro helped me because it provided:

- Coroutines support

The coroutines support is awesome and allowed me to write asynchronous code in a non-asynchronous fashion while still actually doing operations asynchronously. If you’ve read about C# 5’s await support, this is sort of like that except implemented using iterator blocks.

- Comprehensive MVVM support

One thing I’ve realised about MVVM is that it doesn’t describe a solution to the entire problem, which also includes navigation between, and composition of, views. Caliburn Micro has a concept called Conductors that helps with this, and it also abstracts the WP7 navigation functionality away from you.

- Tombstoning support

Tombstoning in WP7 can be a pain, but Caliburn Micro makes it relatively easy. You simply apply attributes to properties in your view model that you want saved when your app gets tombstoned, and Caliburn Micro will automatically restore those properties’ values when your application is restored. It also helps you when you’re using WP7 Tasks that cause your app to get tombstoned before they return you some data the user selected.

- Conventions-based data binding

I have a love-hate relationship with Caliburn Micro’s conventions-based data binding. It allows you to omit explicitly defined bindings in your view and Caliburn Micro will do it automatically for you based on its extensible conventions. On the one hand, it makes data binding easy; in particular commanding with ICommand, as it can just link an event in your view and a method on your view model automatically (and run that method as a coroutine, if you like). On the other hand, when something goes wrong, it’s much more difficult to find out why the black magic isn’t working.

The biggest disadvantage to Caliburn Micro is that it adds quite a lot of advanced techniques to your toolbox, which is great if you’re experienced, but can make it harder for people new to your project and unfamiliar with Caliburn Micro to get started. The other disadvantage is that the documentation (at the time of writing) is okay, but in a lot of cases I found I needed to dig through Caliburn Micro’s source code myself to see what was going on. (Tip: create and use a debug build of Caliburn Micro when debugging it, since the Release builds optimise out a lot of methods and make stepping through its code difficult. But don’t forget to switch back to the Release build when you publish to Marketplace).

Use the Silverlight for Windows Phone Toolkit

The default WP7 SDK is strangely missing some of the controls you expect to see there to make a good WP7 application look and feel like the native apps that come on the phone. Turns out that stuff is, for some reason, inside the Silverlight for Windows Phone Toolkit. The toolkit gets you those basic, expected things like the animated transitions between pages, the subtle tilting effect on buttons when you touch them, context menus, date pickers, list pickers, an easier API for gestures, etc.

The best way to learn how to use the stuff in the toolkit is to download the Source & Sample package and take a careful look at the sample code.

Use the Platform’s Theme Resources

WP7 comes with a lot of theming resources that you can reference using the StaticResource markup extension. Keep this MSDN page open in your browser while developing and use it as a reference. I highly recommend you use them everywhere you can, because they help keep your application’s look and feel consistent with the WP7 standards, and also come with the side effect of making your application automatically compatible with the user’s chosen background style (light/dark) and accent colour. So when the user selects black text on a white background, your text will automatically apply that styling. Neat!

While testing your application, I would encourage you to regularly switch between the different background styles and accent colours to make sure your application looks good no matter what theme the user chooses.

Take Advantage of the SDK’s Icon Library

The SDK comes with a library of icons you can use in your application, saved in C:\Program Files (x86)\Microsoft SDKs\Windows Phone\v7.0\Icons. Take advantage of them to ensure your icons are consistent with the WP7 look & feel and are familiar to your users.

Watch out for the Inbuilt HTTP Request Caching

As far as I can tell, WP7 seems to automatically and transparently cache HTTP requests for you based on their HTTP caching headers. This doesn’t seem to be documented on the HttpWebRequest class page, but at least one other person has noticed this behaviour. So if you’re calling a REST service and that REST service is setting cache headers saying cache the result for a day, your users won’t see new data for a day. Keep this in mind and perhaps change your service’s caching headers.

Borrow Code from the Expression Blend Samples to Enable VisualState binding to ViewModels

In Silverlight 4, you might change your visual state based on a property in your view model by using the DataTrigger in XAML. However, since WP7 is a sort of Silverlight 3 with extra bits, it doesn’t have DataTriggers. I chose to borrow some classes from the Expression Blend Samples code (licensed under Ms-PL), in particular the DataStateSwitchBehavior. It’s very elegant and lets me write XAML like this:

<i:Interaction.Behaviors>

<local:DataStateSwitchBehavior Binding="{Binding IsLoading}">

<local:DataStateSwitchCase Value="True" State="IsLoading" />

<local:DataStateSwitchCase Value="False" State="HasLoaded" />

</local:DataStateSwitchBehavior>

<local:DataStateSwitchBehavior Binding="{Binding HasFailed}">

<local:DataStateSwitchCase Value="False" State="HasNotFailed" />

<local:DataStateSwitchCase Value="True" State="HasFailed" />

</local:DataStateSwitchBehavior>

</i:Interaction.Behaviors>

To use the DataStateSwitchBehavior, you will also need to take the BindingListener class, the ConverterHelper class, and the GoToState class. In the example above I’m binding to a bool, but it even works if you bind to an enum.

Recognise and Handle the AG_E_NETWORK_ERROR from a MediaElement Control

While testing your application on a hardware device, you’ll likely be testing it while connected to the PC and the Zune software. However, for some reason the MediaElement control will fail with the AG_E_NETWORK_ERROR when you try to use it. Don’t panic, simply disconnect your phone from the Zune software and try again, or try connecting your phone using the WPConnect tool (C:\Program Files (x86)\Microsoft SDKs\Windows Phone\v7.0\Tools\WPConnect\WPConnect.exe) instead of Zune. Be kind to your users and show them a nice error message telling them to try disconnecting their phones from Zune if you detect that error.

Work Around Issues with the Pivot Control

There are reports on the Internet that the Pivot control has crashing issues when setting its SelectedIndex. Caliburn Micro has a PivotFix class in its WP7 samples you can use to work around it, plus you might like to try the workarounds on this page. However, if you still can’t get it to work (like I couldn’t), try simply slicing and reordering the array of pivot items so the one you want selected is first, which avoids this issue. For example, if you want pivot item C to be first, reorder A,B,C,D,E into C,D,E,A,B. Your users won’t notice the difference since the Pivot control automatically wraps the end of the list to the start and vice versa.
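The slice-and-reorder trick is just a rotation of the list. Here's a minimal sketch; PivotOrder and RotateToFront are hypothetical names, and you'd apply it to whatever collection you build your pivot items from.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class PivotOrder
{
    // Rotates items so that the item at selectedIndex comes first,
    // keeping the cyclic order: A,B,C,D,E with index 2 gives C,D,E,A,B.
    public static List<T> RotateToFront<T>(IList<T> items, int selectedIndex)
    {
        if (items == null) throw new ArgumentNullException("items");
        if (selectedIndex < 0 || selectedIndex >= items.Count)
            throw new ArgumentOutOfRangeException("selectedIndex");

        // Everything from the selected item onwards, then the items before it.
        return items.Skip(selectedIndex).Concat(items.Take(selectedIndex)).ToList();
    }
}
```

Because the rotated list always starts with the item you want shown, you never need to set SelectedIndex at all.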

I found it very difficult to tell that it was the Pivot control causing the crashes; my application would trigger a break by the debugger in the App.xaml.cs’s unhandled exception handler method and the exception’s description would be “The parameter is incorrect” and there would be no stack trace (??!). If you see this exception, investigate how you’re using your Pivot control.

Support Infinite Scrolling

Everyone loves infinite scrolling of lists, where more content in the list is loaded dynamically as you scroll down. Unfortunately, WP7’s SDK doesn’t give you any help out of the box in regards to doing this. Thankfully, Daniel Vaughan has developed a neat attached property you can attach to list boxes that will call a data bound ICommand when the user scrolls to the bottom of the list. You can use this to load more data into the ListBox.

Daniel’s ScrollViewerMonitor class uses a BindingListener class, which isn’t the same class as the one you’ve borrowed from Expression Blend Samples, but you can easily modify his code to use that class instead.

Be aware that infinite scrolling will likely require careful monitoring of your memory usage (WP7’s max is currently 90MB for phones with 256MB of RAM); you will probably need to put an upper limit on how far you can scroll. It will probably also mean you’ll be using a VirtualizingStackPanel inside of your ListBox (that’s the default) to keep memory usage down, but keep in mind the performance for scrolling rapidly up and down in a VirtualizingStackPanel-powered ListBox is poor on WP7 at the moment.

Learn How to Hide the System Tray using Visual States

A typical case where you’d want to hide the system tray using visual states is when you’ve got a visual state group for device orientation, i.e. Portrait and Landscape (use a DataStateSwitchBehavior and bind it to the Page’s Orientation property). Visual states use animations to change properties on objects, but unfortunately animations in XAML don’t work with “custom” attached properties, such as shell:SystemTray.IsVisible.

To get around that, you’ll have to add that animation to the visual state’s storyboard in code. Here’s some code that goes in your page class’s constructor, underneath the call to InitializeComponent, that hides the system tray when the device is turned horizontally:

ObjectAnimationUsingKeyFrames animation = new ObjectAnimationUsingKeyFrames();

Storyboard.SetTargetProperty(animation, new PropertyPath(SystemTray.IsVisibleProperty));

Storyboard.SetTargetName(animation, "Page");

DiscreteObjectKeyFrame keyFrame = new DiscreteObjectKeyFrame();

keyFrame.Value = false;

keyFrame.KeyTime = KeyTime.FromTimeSpan(TimeSpan.Zero);

animation.KeyFrames.Add(keyFrame);

Landscape.Storyboard.Children.Add(animation); //Landscape is my visual state

I highly recommend hiding the system tray when in landscape orientation, because it takes up a ridiculous amount of room on the side of the screen and looks horrible.

Learn How to Involve the Application Bar in Visual States

Incredibly annoyingly, nothing on a page’s application bar is data-bindable, which instantly makes it very hard to use in an MVVM way, not to mention making it so you can’t do things like hide it or disable certain buttons using visual states in Blend. However, there is a workaround that can enable you to change the application bar’s properties using visual states if you’re willing to write some C#. In your page’s constructor, underneath the call to InitializeComponent, you can set up hooks off your visual states’ animation storyboard’s Completed events and make your changes there. For example:

IsLoading.Storyboard.Completed += (o, a) => Page.ApplicationBar.IsVisible = false;

HasLoaded.Storyboard.Completed += (o, a) => Page.ApplicationBar.IsVisible = true;

Conclusion

WP7 is a great platform to develop on because it’s Silverlight; it means if you’ve got some Silverlight (or WPF) experience you can be instantly productive. However, there are some limitations to the platform at the moment and for some things you need to go outside the box to be able to achieve them. Hopefully this post has made some of that easier for you.

More Dynamic Queries using Expression Trees

February 09, 2011 2:42 PM by Daniel Chambers

In my first post on dynamic queries using expression trees, I explained how one could construct an expression tree manually that would take an array (for example, {10,12,14}) and turn it into a query like this:

tag => tag.ID == 10 || tag.ID == 12 || tag.ID == 14

A reader recently wrote to me and asked whether one could form a similar query that instead queried across multiple properties, like this:

tag => tag.ID == 10 || tag.ID == 12 || tag.Name == "C#" || tag.Name == "Expression Trees"

The short answer is “yes, you can”, however the long answer is “yes, but it takes a bit of doing”! In this blog post, I’ll detail how to write a utility method that allows you to create these sorts of queries for any number of properties on an object. (If you haven’t read the previous post, please read it now.)
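If you'd like a quick refresher without leaving this page, here's a minimal sketch of what a single-property builder can look like. It's written from scratch for illustration and isn't necessarily identical to the code in the earlier post; the OrTreeSketch class name is made up.

```csharp
using System;
using System.Collections.Generic;
using System.Linq.Expressions;

public static class OrTreeSketch
{
    // Builds: x => member(x) == item1 || member(x) == item2 || ...
    public static Expression<Func<TValue, bool>> BuildOrExpressionTree<TValue, TCompareAgainst>(
        IEnumerable<TCompareAgainst> wantedItems,
        Expression<Func<TValue, TCompareAgainst>> memberAccessExpression)
    {
        // Reuse the lambda's own parameter so every comparison refers to the same input.
        ParameterExpression inputParam = memberAccessExpression.Parameters[0];
        Expression body = null;

        foreach (TCompareAgainst wantedItem in wantedItems)
        {
            // e.g. tag.ID == 10
            Expression comparison = Expression.Equal(
                memberAccessExpression.Body,
                Expression.Constant(wantedItem, typeof(TCompareAgainst)));

            // Chain the comparisons together with || (OrElse short-circuits like C#'s ||).
            body = body == null ? comparison : Expression.OrElse(body, comparison);
        }

        if (body == null)
            throw new ArgumentException("wantedItems may not be empty", "wantedItems");

        return Expression.Lambda<Func<TValue, bool>>(body, inputParam);
    }
}
```

Calling BuildOrExpressionTree<Tag, int>(new[] {10, 12, 14}, tag => tag.ID) would produce a tree equivalent to the first lambda shown above, assuming a Tag class with an int ID property.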

Previously we had defined a method with this signature (I’ve renamed the “convertBetweenTypes” parameter to “memberAccessExpression”; the original name sucked, frankly; this is a clearer name):

public static Expression<Func<TValue, bool>> BuildOrExpressionTree<TValue, TCompareAgainst>(

IEnumerable<TCompareAgainst> wantedItems,

Expression<Func<TValue, TCompareAgainst>> memberAccessExpression)

Now that we want to query multiple properties, we’ll need to change this signature to something that allows you to pass multiple wantedItems lists and a memberAccessExpression for each of them.

public static Expression<Func<TValue, bool>> BuildOrExpressionTree<TValue>(

IEnumerable<Tuple<IEnumerable<object>, LambdaExpression>> wantedItemCollectionsAndMemberAccessExpressions)

Eeek! That’s a pretty massive new single parameter. What we’re now doing is passing in multiple Tuples (if you’re using .NET 3.5, make your own Tuple class), where the first component is the list of wanted items, and the second component is the member access expression. You’ll notice that a lot of the generic types have gone out the window and we’re passing IEnumerables of object and LambdaExpressions around; this is a price we’ll have to pay for having a more flexible method.

How would you call this monster method? Like this:

var wantedItemsAndMemberExprs = new List<Tuple<IEnumerable<object>, LambdaExpression>>

{

new Tuple<IEnumerable<object>, LambdaExpression>(new object[] {10, 12}, (Expression<Func<Tag, int>>)(t => t.ID)),

new Tuple<IEnumerable<object>, LambdaExpression>(new[] {"C#", "Expression Trees"}, (Expression<Func<Tag, string>>)(t => t.Name)),

};

Expression<Func<Tag, bool>> whereExpr = BuildOrExpressionTree<Tag>(wantedItemsAndMemberExprs);

Note having to explicitly specify “object[]” for the array of IDs; this is because, although you can now assign IEnumerable<ChildClass> to IEnumerable<ParentClass> (covariance) in C# 4, that only works for reference types. Value types are invariant, so you need to explicitly force int to be boxed as a reference type. Note also having to explicitly cast the member access lambda expressions; this is because the C# compiler won’t generate an expression tree for you unless it knows you explicitly want an Expression<T>; casting forces it to understand that you want an expression tree here and not just some anonymous delegate.
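To see those two compiler rules in isolation, here is a minimal sketch. The VarianceDemo class and its method names are made up for illustration, and it uses string rather than the Tag class so that it compiles on its own.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Linq.Expressions;

public static class VarianceDemo
{
    public static IEnumerable<object> Names()
    {
        // string is a reference type, so a string[] converts to IEnumerable<object> implicitly.
        return new[] { "C#", "Expression Trees" };
    }

    public static IEnumerable<object> Ids()
    {
        // IEnumerable<object> ids = new[] { 10, 12 }; // does not compile: int is a value type
        return new object[] { 10, 12 }; // each int is boxed explicitly instead
    }

    public static LambdaExpression LengthAccessor()
    {
        // Without the cast the compiler has no target type for the lambda, so it cannot
        // choose between building a delegate and building an expression tree.
        return (Expression<Func<string, int>>)(s => s.Length);
    }
}
```

Both methods hand back an IEnumerable<object>, which is exactly the shape the first Tuple component needs.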

So how is the new BuildOrExpressionTree method implemented? Like this:

public static Expression<Func<TValue, bool>> BuildOrExpressionTree<TValue>(

IEnumerable<Tuple<IEnumerable<object>, LambdaExpression>> wantedItemCollectionsAndMemberAccessExpressions)

{

ParameterExpression inputParam = null;

Expression binaryExpressionTree = null;

if (wantedItemCollectionsAndMemberAccessExpressions.Any() == false)

throw new ArgumentException("wantedItemCollectionsAndMemberAccessExpressions may not be empty", "wantedItemCollectionsAndMemberAccessExpressions");

foreach (Tuple<IEnumerable<object>, LambdaExpression> tuple in wantedItemCollectionsAndMemberAccessExpressions)

{

IEnumerable<object> wantedItems = tuple.Item1;

LambdaExpression memberAccessExpr = tuple.Item2;

if (inputParam == null)

inputParam = memberAccessExpr.Parameters[0];

else

memberAccessExpr = new ParameterExpressionRewriter(memberAccessExpr.Parameters[0], inputParam).VisitAndConvert(memberAccessExpr, "BuildOrExpressionTree");

BuildBinaryOrTree(wantedItems, memberAccessExpr.Body, ref binaryExpressionTree);

}

return Expression.Lambda<Func<TValue, bool>>(binaryExpressionTree, new[] { inputParam });

}

As I explain this method, you may want to keep an eye on the expression tree diagram from the previous post, so you can visualise the expression tree structure easily. The method loops through each tuple that contains a wantedItems collection and a memberAccessExpression, and progressively builds an expression tree from all the items in all the collections. You’ll notice within the foreach loop that the ParameterExpression from the first memberAccessExpression is kept and used to “rewrite” subsequent memberAccessExpressions. Each memberAccessExpr is a separate expression tree, each with its own ParameterExpression, but since we’re now using multiple of them and combining them all into a single expression tree that still takes a single parameter, we need to ensure that those expressions use a common ParameterExpression. We do this by implementing an ExpressionVisitor that rewrites the expression and replaces the ParameterExpression it uses with the one we want it to use.

public class ParameterExpressionRewriter : ExpressionVisitor

{

private ParameterExpression _OldExpr;

private ParameterExpression _NewExpr;

public ParameterExpressionRewriter(ParameterExpression oldExpr, ParameterExpression newExpr)

{

_OldExpr = oldExpr;

_NewExpr = newExpr;

}

protected override Expression VisitParameter(ParameterExpression node)

{

if (node == _OldExpr)

return _NewExpr;

else

return base.VisitParameter(node);

}

}

The ExpressionVisitor uses the visitor pattern, so it recurses through an expression tree and calls different methods on the class depending on what node type it encounters and allows you to rewrite the tree by returning something different from the method. In the VisitParameter method above, we’re simply returning the new ParameterExpression when we encounter the old ParameterExpression in the tree. Note that ExpressionVisitor is new to .NET 4, so if you’re stuck in 3.5-land use this similar implementation instead. (For more information on modifying expression trees, see this MSDN page.)
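If you want to watch the rewriting happen on its own, here is a small sketch that combines two separately written lambdas. The RewriterDemo and SwapParameter names are hypothetical; SwapParameter just repeats the idea of ParameterExpressionRewriter so that the sketch compiles by itself.

```csharp
using System;
using System.Linq.Expressions;

public static class RewriterDemo
{
    // Same idea as ParameterExpressionRewriter, repeated here to keep the sketch standalone.
    private sealed class SwapParameter : ExpressionVisitor
    {
        private readonly ParameterExpression _oldParam;
        private readonly ParameterExpression _newParam;

        public SwapParameter(ParameterExpression oldParam, ParameterExpression newParam)
        {
            _oldParam = oldParam;
            _newParam = newParam;
        }

        protected override Expression VisitParameter(ParameterExpression node)
        {
            return node == _oldParam ? _newParam : base.VisitParameter(node);
        }
    }

    public static Expression<Func<string, bool>> Combine()
    {
        Expression<Func<string, bool>> isEmpty = s => s.Length == 0;
        Expression<Func<string, bool>> isCSharp = t => t == "C#";

        // "s" and "t" are distinct ParameterExpression objects, so the second body
        // is rewritten to use the first lambda's parameter before the two are ORed.
        ParameterExpression shared = isEmpty.Parameters[0];
        Expression rewrittenBody =
            new SwapParameter(isCSharp.Parameters[0], shared).Visit(isCSharp.Body);

        return Expression.Lambda<Func<string, bool>>(
            Expression.OrElse(isEmpty.Body, rewrittenBody), shared);
    }
}
```

Calling RewriterDemo.Combine().Compile() gives a delegate that returns true for "" and "C#" and false for anything else. As far as I know, if you skip the rewrite and OR the two bodies together directly, compiling the lambda fails with an InvalidOperationException because "t" is never defined in the combined lambda.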

Going back to the BuildOrExpressionTree method, we see the next thing we do is call the BuildBinaryOrTree method. Note that this method is slightly different to the implementation in the previous post, as I’ve changed it to be a faster iterative algorithm (rather than recursive) and it is no longer generic. The method should look pretty familiar:

private static void BuildBinaryOrTree(

IEnumerable<object> items,

Expression memberAccessExpr,

ref Expression expression)

{

foreach (object item in items)

{

ConstantExpression constant = Expression.Constant(item, item.GetType());

BinaryExpression comparison = Expression.Equal(memberAccessExpr, constant);

if (expression == null)

expression = comparison;

else

expression = Expression.OrElse(expression, comparison);

}

}

As you can see, for each iteration in the main BuildOrExpressionTree method, the existing binary OR tree is fed back into the BuildBinaryOrTree method and extended with more nodes, except each call uses items from a different collection and a different memberAccessExpression to extend the tree. Once all Tuples have been processed, the binary OR tree is bound together with its ParameterExpression and turned into the LambdaExpression we need for use in an IQueryable Where method. We can use it like this:

Expression<Func<Tag, bool>> whereExpr = BuildOrExpressionTree<Tag>(wantedItemsAndMemberExprs);

IQueryable<Tag> tagQuery = tags.Where(whereExpr);

In conclusion, we see that wanting to query those additional properties required us to add a whole bunch more code in order to make it work. However, in the end, it does work and works quite well, although admittedly the method is a little awkward to use. This could be cleaned up by wrapping it in a “builder”-style class that simplifies the API a little, but I’ll leave that as an exercise to the reader.
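For what it's worth, here is one rough sketch of what such a builder might look like. Everything in it (OrExpressionBuilder, Match, Build, ParameterSwapper) is a hypothetical name, and it is a different design from the method above rather than a wrapper around it: it keeps the generic member type, so no boxing or casting is needed, and it creates its own ParameterExpression instead of reusing the one from the first lambda.

```csharp
using System;
using System.Linq.Expressions;

public class OrExpressionBuilder<TValue>
{
    private readonly ParameterExpression _inputParam = Expression.Parameter(typeof(TValue), "value");
    private Expression _binaryExpressionTree;

    // Adds one "member == item" comparison per wanted item, ORed onto the tree built so far.
    public OrExpressionBuilder<TValue> Match<TMember>(
        Expression<Func<TValue, TMember>> memberAccessExpression,
        params TMember[] wantedItems)
    {
        // Rewrite the lambda body so it uses the builder's single shared parameter.
        Expression memberAccess =
            new ParameterSwapper(memberAccessExpression.Parameters[0], _inputParam)
                .Visit(memberAccessExpression.Body);

        foreach (TMember item in wantedItems)
        {
            Expression comparison =
                Expression.Equal(memberAccess, Expression.Constant(item, typeof(TMember)));
            _binaryExpressionTree = _binaryExpressionTree == null
                ? comparison
                : Expression.OrElse(_binaryExpressionTree, comparison);
        }
        return this;
    }

    public Expression<Func<TValue, bool>> Build()
    {
        if (_binaryExpressionTree == null)
            throw new InvalidOperationException("Add at least one wanted item before calling Build.");
        return Expression.Lambda<Func<TValue, bool>>(_binaryExpressionTree, _inputParam);
    }

    private sealed class ParameterSwapper : ExpressionVisitor
    {
        private readonly ParameterExpression _oldParam;
        private readonly ParameterExpression _newParam;

        public ParameterSwapper(ParameterExpression oldParam, ParameterExpression newParam)
        {
            _oldParam = oldParam;
            _newParam = newParam;
        }

        protected override Expression VisitParameter(ParameterExpression node)
        {
            return node == _oldParam ? _newParam : base.VisitParameter(node);
        }
    }
}
```

With something like this, the Tag query from earlier could be written as new OrExpressionBuilder<Tag>().Match(t => t.ID, 10, 12).Match(t => t.Name, "C#", "Expression Trees").Build().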

Combining multiple assemblies into a single EXE for a WPF application

December 23, 2010 4:22 PM by Daniel Chambers

Recently I’ve been writing a small WPF application for work where the goal is to be able to allow its users to download a single .exe file onto a server machine they are working on, use the executable, then delete it once they are done on that server. The servers in question have a strict software installation policy, so this application cannot have an installer and therefore must be as easy to ‘deploy’ as possible. Unfortunately, pretty much every .NET project is always going to reference some 3rd party assemblies that will be placed alongside the executable upon deploy. Microsoft has a tool called ILMerge that is capable of merging .NET assemblies together, except that it is unable to do so for WPF assemblies, since they contain XAML which contains baked in assembly references. While thinking about the issue, I supposed that I could’ve simply provided our users with a zip file that contained a folder with the WPF executable and its referenced assemblies inside it, and get them to extract that, go into the folder and find and run the executable, but that just felt dirty.

Searching around the internet found me a very useful post by an adventuring New Zealander, which introduced me to the idea of storing the referenced assemblies inside the WPF executable as embedded resources, and then using an assembly resolution hook to load the assembly out of the resources and provide it to the CLR for use. Unfortunately, our swashbuckling New Zealander’s code didn’t work for my particular project, as it set up the assembly resolution hook after my application was trying to find its assemblies. He also didn’t mention a clean way of automatically including those referenced assemblies as resources, which I wanted as I didn’t want to be manually including my assemblies as resources in my project file. However, his blog post planted the seed of what is to come, so props to him for that.

I dug around in MSBuild and figured out a way of hooking off the normal build process and dynamically adding any project references that are going to be copied locally (ie copied into the bin directory, alongside the exe file) as resources to be embedded. This turned out to be quite simple (this code snippet should be added to your project file underneath where the standard Microsoft.CSharp.targets file is imported):

<Target Name="AfterResolveReferences">

<ItemGroup>

<EmbeddedResource Include="@(ReferenceCopyLocalPaths)" Condition="'%(ReferenceCopyLocalPaths.Extension)' == '.dll'">

<LogicalName>%(ReferenceCopyLocalPaths.DestinationSubDirectory)%(ReferenceCopyLocalPaths.Filename)%(ReferenceCopyLocalPaths.Extension)</LogicalName>

</EmbeddedResource>

</ItemGroup>

</Target>

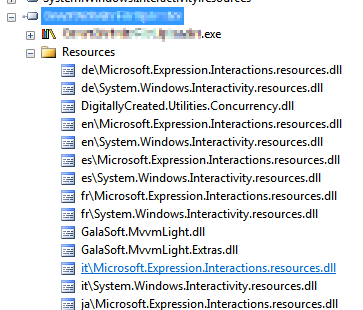

Figure 1. Copy-Local references saved as embedded resources

The AfterResolveReferences target is a target defined by the normal build process, but deliberately left empty so you can override it and inject your own logic into the build. It happens after the ResolveAssemblyReference task is run; that task follows up your project references and determines their physical locations and other properties, and it just happens to output the ReferenceCopyLocalPaths item which contains the paths of all the assemblies that are copy-local assemblies. So our target above creates a new EmbeddedResource item for each of these paths, excluding any path that doesn’t point to a .dll file (for example, the associated .pdb and .xml files). The name of the embedded resource (the LogicalName) is set to be the path and filename of the assembly file. Why the path and not just the filename, you ask? Well, some assemblies are put under subdirectories in your bin folder because they have the same file name, but differ in culture (for example, Microsoft.Expression.Interactions.resources.dll & System.Windows.Interactivity.resources.dll). If we didn’t include the path in the resource name, we would get conflicting resource names. The results of this MSBuild target can be seen in Figure 1.

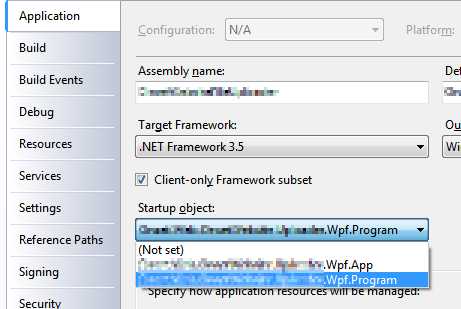

Figure 2. Select your program entry point

Once I had all the copy-local assemblies stored safely inside the executable as embedded resources, I figured out a way of getting the assembly resolution hook hooked up before any WPF code starts (and therefore requiring my copy-local assemblies to be loaded before the hook is set up). Normally WPF applications contain an App.xaml file, which acts as a magic entry point to the application and launches the first window. However, the App.xaml isn’t actually that magical. If you look inside the obj folder in your project folder, you will find an App.g.cs file, which is generated from your App.xaml. It contains a normal “static void Main” C# entry point. So in order to get in before WPF, all you need to do is define your own entry point in a new class, do what you need to, then call the normal WPF entry-point and innocently act like nothing unusual has happened. (This will require you to change your project settings and specifically choose your application’s entry point (see Figure 2)). This is what my class looked like:

public class Program

{

[STAThreadAttribute]

public static void Main()

{

App.Main();

}

}

Don’t forget the STAThreadAttribute on Main; if you leave it out, your application will crash on startup. With this class in place, I was able to easily hook in my custom assembly loading code before the WPF code ran at all:

public class Program

{

[STAThreadAttribute]

public static void Main()

{

AppDomain.CurrentDomain.AssemblyResolve += OnResolveAssembly;

App.Main();

}

private static Assembly OnResolveAssembly(object sender, ResolveEventArgs args)

{

Assembly executingAssembly = Assembly.GetExecutingAssembly();

AssemblyName assemblyName = new AssemblyName(args.Name);

string path = assemblyName.Name + ".dll";

if (assemblyName.CultureInfo.Equals(CultureInfo.InvariantCulture) == false)

{

path = String.Format(@"{0}\{1}", assemblyName.CultureInfo, path);

}

using (Stream stream = executingAssembly.GetManifestResourceStream(path))

{

if (stream == null)

return null;

byte[] assemblyRawBytes = new byte[stream.Length];

stream.Read(assemblyRawBytes, 0, assemblyRawBytes.Length);

return Assembly.Load(assemblyRawBytes);

}

}

}

The code above registers for the AssemblyResolve event on the current application domain. That event is fired when the CLR is unable to locate a referenced assembly and allows you to provide it with one. The code checks if the wanted assembly has a non-invariant culture and if it does, attempts to load it from the “subfolder” (really just a prefix on the resource name) named after the culture. This bit is what I assume .NET does when it looks for those assemblies normally, but I haven’t seen any documentation to confirm it, so keep an eye on that part’s behaviour when you use it. The code then goes on to load the assembly out of the resources and return it to the framework for use. This code is slightly improved from our daring New Zealander’s code (other than the culture behaviour) as it handles the case where the assembly can’t be found in the resources and simply returns null (after which your program will crash with an exception complaining about the missing assembly, which is a tad clearer than the NullReferenceException you would have got otherwise).
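Two defensive tweaks may be worth considering here, sketched below under a hypothetical EmbeddedAssemblyNames helper class (neither method is part of the original code). First, as far as I know AssemblyName.CultureInfo is null when the requested name carries no Culture component at all (for example a partial name like "Foo"), so the culture check guards against that. Second, Stream.Read is allowed to return fewer bytes than requested, so the sketch copies the whole stream instead of relying on a single Read call.

```csharp
using System;
using System.Globalization;
using System.IO;
using System.Reflection;

public static class EmbeddedAssemblyNames
{
    // Maps an assembly display name to the resource name produced by the LogicalName scheme.
    public static string ToResourceName(string assemblyDisplayName)
    {
        AssemblyName assemblyName = new AssemblyName(assemblyDisplayName);
        string path = assemblyName.Name + ".dll";

        // Assumption: CultureInfo is null when the display name has no Culture component.
        CultureInfo culture = assemblyName.CultureInfo;
        if (culture != null && !culture.Equals(CultureInfo.InvariantCulture))
            path = String.Format(@"{0}\{1}", culture, path);

        return path;
    }

    // Reads the stream to its end, however many Read calls that takes internally.
    public static byte[] ReadAllBytes(Stream stream)
    {
        using (MemoryStream buffer = new MemoryStream())
        {
            stream.CopyTo(buffer); // Stream.CopyTo is available from .NET 4
            return buffer.ToArray();
        }
    }
}
```

OnResolveAssembly could then pass ToResourceName(args.Name) to GetManifestResourceStream and hand the result of ReadAllBytes to Assembly.Load.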

In conclusion, all these changes together mean you can simply hit build in your project and the necessary assemblies will be automatically included as resources in your executable to be pulled out at runtime and loaded by the assembly resolution hook. This means you can simply copy just your executable to any location without its associated referenced assemblies and it will run just fine.

Automatically recording the Mercurial revision hash using MSBuild

November 12, 2010 2:33 PM by Daniel Chambers

On one of the websites I’ve worked on recently we chose to display the website’s version ID at the bottom of each page. Since we use Mercurial for version control (it’s totally awesome, by the way. I hope to never go back to Subversion), that means we display a truncated copy of the revision’s hash. The website is a pet project and my friend and I manage it informally, so having the hash displayed there allows us to easily remember which version is currently running on Live. It’s an ASP.NET MVC site, so I created a ConfigurationSection that I separated out into its own Revision.config file, into which we manually copy and paste the revision hash just before we upload the new version to the live server. As VS2010’s new web publishing features mean that publishing a directly deployable copy of the website is literally a one-click affair, this manual step galled me. So I set out to figure out how I could automate it.

I spent a while digging around in the undocumented mess that is the MSBuild script that backs the web publishing features (as I discussed in a previous blog) and learning about MSBuild and I eventually developed a final implementation which is actually quite simple. The first step was to get the Mercurial revision hash into MSBuild; to do this I developed a small MSBuild task that simply uses the command-line hg.exe to get the hash and parses it out of its console output. The code is pretty self-explanatory, so take a look:

public class MercurialVersionTask : Task

{

[Required]

public string RepositoryPath { get; set; }

[Output]

public string MercurialVersion { get; set; }

public override bool Execute()

{

try

{

MercurialVersion = GetMercurialVersion(RepositoryPath);

Log.LogMessage(MessageImportance.Low, String.Format("Mercurial revision for repository \"{0}\" is {1}", RepositoryPath, MercurialVersion));

return true;

}

catch (Exception e)

{

Log.LogError("Could not get the mercurial revision, unhandled exception occurred!");

Log.LogErrorFromException(e, true, true, RepositoryPath);

return false;

}

}

private string GetMercurialVersion(string repositoryPath)

{

Process hg = new Process();

hg.StartInfo.UseShellExecute = false;

hg.StartInfo.RedirectStandardError = true;

hg.StartInfo.RedirectStandardOutput = true;

hg.StartInfo.CreateNoWindow = true;

hg.StartInfo.FileName = "hg";

hg.StartInfo.Arguments = "id";

hg.StartInfo.WorkingDirectory = repositoryPath;

hg.Start();

string output = hg.StandardOutput.ReadToEnd().Trim();

string error = hg.StandardError.ReadToEnd().Trim();

Log.LogMessage(MessageImportance.Low, "hg.exe Standard Output: {0}", output);

Log.LogMessage(MessageImportance.Low, "hg.exe Standard Error: {0}", error);

hg.WaitForExit();

if (String.IsNullOrEmpty(error) == false)

throw new Exception(String.Format("hg.exe error: {0}", error));

string[] tokens = output.Split(' ');

return tokens[0];

}

}

I created a new MsBuild project in DigitallyCreated Utilities to house this class (and any others I may develop in the future). At the time of writing, you’ll need to get the code from the repository and compile it yourself, as I haven’t released an official build with it in it yet.
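One detail worth knowing about the parsing step: hg id appends a “+” to the hash when the working directory has uncommitted changes (so the output looks something like “5f3b1a9c2e7d+ tip”), and the task above keeps that “+” as part of the returned token. If you would rather separate the two, here is a small sketch; HgIdParser and ParseRevision are hypothetical names, not part of DigitallyCreated Utilities.

```csharp
using System;

public static class HgIdParser
{
    // Extracts the short hash from "hg id" output such as "5f3b1a9c2e7d+ tip".
    public static string ParseRevision(string hgIdOutput, out bool hasUncommittedChanges)
    {
        if (String.IsNullOrWhiteSpace(hgIdOutput))
            throw new ArgumentException("hg id produced no output", "hgIdOutput");

        // The first space-separated token is the hash, optionally followed by "+".
        string firstToken = hgIdOutput.Trim().Split(' ')[0];
        hasUncommittedChanges = firstToken.EndsWith("+", StringComparison.Ordinal);
        return firstToken.TrimEnd('+');
    }
}
```

Whether to strip the “+” is a judgment call; leaving it in the displayed hash is a handy warning that the deployed build doesn’t exactly match a committed revision.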

I then needed to start using this task in the website’s project file. A one-liner near the top of the file imports it and makes it available for use:

<UsingTask AssemblyFile="..\lib\DigitallyCreated.Utilities.MsBuild.dll" TaskName="DigitallyCreated.Utilities.MsBuild.MercurialVersionTask" />

Next, I wrote the target that would use this task to set the hash into the Revision.config file. I decided to use the really nice tasks provided by the MSBuild Extension Pack project to do this. This meant I needed to also import their tasks into the project (after installing the pack, of course), at the top of the file:

<PropertyGroup>

<ExtensionTasksPath>$(MSBuildExtensionsPath32)\ExtensionPack\4.0\</ExtensionTasksPath>

</PropertyGroup>

<Import Project="$(ExtensionTasksPath)MSBuild.ExtensionPack.tasks" />

Writing the hash-setting target was very easy:

<Target Name="SetMercurialRevisionInConfig">

<DigitallyCreated.Utilities.MsBuild.MercurialVersionTask RepositoryPath="$(MSBuildProjectDirectory)">

<Output TaskParameter="MercurialVersion" PropertyName="MercurialVersion" />

</DigitallyCreated.Utilities.MsBuild.MercurialVersionTask>

<MSBuild.ExtensionPack.Xml.XmlFile File="$(_PackageTempDir)\Revision.config" TaskAction="UpdateAttribute" XPath="/revision" Key="hash" Value="$(MercurialVersion)" />

</Target>

The MercurialVersionTask is called, which gets the revision hash and puts it into the MercurialVersion property (as specified by the nested Output tag). The XmlFile task sets that hash into the Revision.config, which is found in the directory specified by _PackageTempDir. That directory is the directory that the VS2010 web publishing pipeline puts the project files while it is packaging them for a publish. That property is set by their MSBuild code; it is, however, liable to disappear in the future, as indicated by the underscore in the name that tells you that it’s a ‘private’ property, so be careful there.

Next I needed to find a place in the VS2010 web publishing MSBuild pipeline where I could hook in that target. Thankfully, the pipeline allows you to easily hook in your own targets by setting properties containing the names of the targets you’d like it to run. So, inside the first PropertyGroup tag at the top of the project file, I set this property, hooking in my target to be run after the PipelinePreDeployCopyAllFilesToOneFolder target:

<OnAfterPipelinePreDeployCopyAllFilesToOneFolder>SetMercurialRevisionInConfig;</OnAfterPipelinePreDeployCopyAllFilesToOneFolder>

This ensures that the target will be run after the CopyAllFilesToSingleFolderForPackage target runs (that target is run by the PipelinePreDeployCopyAllFilesToOneFolder target). The CopyAllFilesToSingleFolderForPackage target copies the project files into your obj folder (specifically the folder specified by _PackageTempDir) in preparation for a publish (this is discussed in a little more detail in that previous post).

And that was it! Upon publishing using Visual Studio (or at the command-line using the process detailed in that previous post), the SetMercurialRevisionInConfig target is called by the web publishing pipeline and sets the hash into the Revision.config file. This means that a deployable build of our website can literally be created with a single click in Visual Studio. Projects that use a continuous integration server to build their projects would also find this very useful.

Locally publishing a VS2010 ASP.NET web application using MSBuild

November 04, 2010 3:13 AM by Daniel Chambers

Visual Studio 2010 has great new Web Application Project publishing features that allow you to easily publish your web app project with a click of a button. Behind the scenes the Web.config transformation and package building is done by a massive MSBuild script that’s imported into your project file (found at: C:\Program Files (x86)\MSBuild\Microsoft\VisualStudio\v10.0\Web\Microsoft.Web.Publishing.targets). Unfortunately, the script is hugely complicated, messy and undocumented (other than some oft-badly spelled and mostly useless comments in the file). A big flowchart of that file and some documentation about how to hook into it would be nice, but seems to be sadly lacking (or at least I can’t find it).

Unfortunately, this means performing publishing via the command line is much more opaque than it needs to be. I was surprised by the lack of documentation in this area, because these days many shops use a continuous integration server and some even do automated deployment (which the VS2010 publishing features could help a lot with), so I would have thought that enabling this (easily!) would have been a fairly major requirement for the feature.

Anyway, after digging through the Microsoft.Web.Publishing.targets file for hours and banging my head against the trial and error wall, I’ve managed to figure out how Visual Studio seems to perform its magic one click “Publish to File System” and “Build Deployment Package” features. I’ll be getting into a bit of MSBuild scripting, so if you’re not familiar with MSBuild I suggest you check out this crash course MSDN page.

Publish to File System

The VS2010 Publish To File System Dialog

Publish to File System took me a while to nut out because I expected some sensible use of MSBuild to be occurring. Instead, VS2010 does something quite weird: it calls on MSBuild to perform a sort of half-deploy that prepares the web app’s files in your project’s obj folder, then it seems to do a manual copy of those files (ie. outside of MSBuild) into your target publish folder. This is really whack behaviour because MSBuild is designed to copy files around (and other build-related things), so it’d make sense if the whole process was just one MSBuild target that VS2010 called on, not a target then a manual copy.

This means that doing this via MSBuild on the command-line isn’t as simple as invoking your project file with a particular target and setting some properties. You’ll need to do what VS2010 ought to have done: create a target yourself that performs the half-deploy then copies the results to the target folder. To edit your project file, right click on the project in VS2010 and click Unload Project, then right click again and click Edit. Scroll down until you find the Import element that imports the web application targets (Microsoft.WebApplication.targets; this file itself imports the Microsoft.Web.Publishing.targets file mentioned earlier). Underneath this line we’ll add our new target, called PublishToFileSystem:

<Target Name="PublishToFileSystem" DependsOnTargets="PipelinePreDeployCopyAllFilesToOneFolder">

<Error Condition="'$(PublishDestination)'==''" Text="The PublishDestination property must be set to the intended publishing destination." />

<MakeDir Condition="!Exists($(PublishDestination))" Directories="$(PublishDestination)" />

<ItemGroup>

<PublishFiles Include="$(_PackageTempDir)\**\*.*" />

</ItemGroup>

<Copy SourceFiles="@(PublishFiles)" DestinationFiles="@(PublishFiles->'$(PublishDestination)\%(RecursiveDir)%(Filename)%(Extension)')" SkipUnchangedFiles="True" />

</Target>

This target depends on the PipelinePreDeployCopyAllFilesToOneFolder target, which is what VS2010 calls before it does its manual copy. Some digging around in Microsoft.Web.Publishing.targets shows that calling this target causes the project files to be placed into the directory specified by the property _PackageTempDir.

The first task we call in our target is the Error task, upon which we’ve placed a condition that ensures that the task only happens if the PublishDestination property hasn’t been set. This will catch you and error out the build in case you’ve forgotten to specify the PublishDestination property. We then call the MakeDir task to create that PublishDestination directory if it doesn’t already exist.

We then define an Item called PublishFiles that represents all the files found under the _PackageTempDir folder. The Copy task is then called which copies all those files to the Publish Destination folder. The DestinationFiles attribute on the Copy element is a bit complex; it performs a transform of the items and converts their paths to new paths rooted at the PublishDestination folder (check out Well-Known Item Metadata to see what those %()s mean).

To call this target from the command-line we can now simply perform this command (obviously changing the project file name and properties to suit you):

msbuild Website.csproj "/p:Platform=AnyCPU;Configuration=Release;PublishDestination=F:\Temp\Publish" /t:PublishToFileSystem

Build Deployment Package

The VS2010 Build Deployment Package command

Thankfully, Build Deployment Package is a lot easier to do from the command line because Visual Studio doesn’t do any manual outside-of-MSBuild stuff; it simply calls an MSBuild target (as it should!). To do this from the command-line, you can simply call on MSBuild like this (again substituting your project file name and property values):

msbuild Website.csproj "/p:Platform=AnyCPU;Configuration=Release;DesktopBuildPackageLocation=F:\Temp\Publish\Website.zip" /t:Package

Note that you can omit the DesktopBuildPackageLocation property from the command line if you’ve already set it using the VS2010 Package/Publish Web project properties UI (the “Location where package will be created” textbox). If you omit it without customising the value in the VS project properties UI, the location will default to the project’s obj\YourCurrentConfigurationHere\Package folder.

Conclusion

In conclusion, we’ve seen how it’s fairly easy to configure your project file to support publishing your ASP.NET web application to a local file system directory using MSBuild from the command line, just like Visual Studio 2010 does (fairly easy once the hard investigative work is done!). We’ve also seen how you can use MSBuild at the command-line to build a deployment package. Using this new knowledge you can now open your project up to the world of automation, where you could, for example, get your continuous integration server to create deployable builds of your web application for you upon each commit to your source control repository.

Removing a Windows System Certificate Store

October 18, 2010 11:30 AM by Daniel Chambers

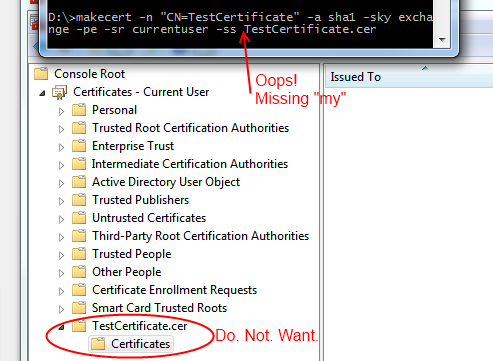

Recently I managed to add an extra certificate store to Windows by mistake, as I accidentally left out a command line argument when using makecert. Unfortunately, the Certificates MMC snap-in doesn’t seem to provide a way for you to delete a certificate store, so I had to resort to a more technical approach in order to get rid of this new unwanted certificate store.

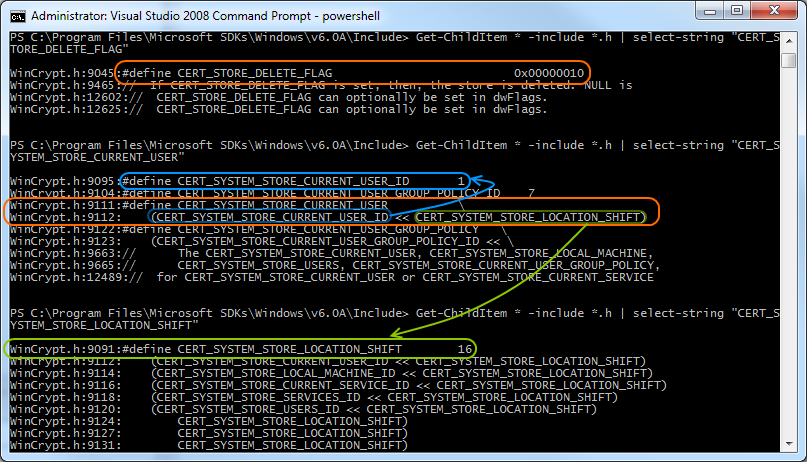

Digging around in the Windows API, I found the CertUnregisterSystemStore function that allows you to delete a certificate store programmatically. So I spun up my copy of LINQPad so that I could quickly script in C# and PInvoke that function. (Incidentally, if you don’t have a copy of LINQPad and you’re a .NET developer, you need to get yourself a copy immediately. It’s invaluable.) Unfortunately, CertUnregisterSystemStore takes a flags parameter, and the actual values of the different flags are defined in C++ .h files, which are not available from C#. So I punched out a few PowerShell commands to search the .h files in the Windows SDK for those #define lines.

Once those flag values were found, deleting the store ended up being this small C# script in LINQPad that simply calls CertUnregisterSystemStore with the appropriate flags:

void Main()

{

int CERT_SYSTEM_STORE_LOCATION_SHIFT = 16;

uint CERT_SYSTEM_STORE_CURRENT_USER_ID = 1;

uint CERT_SYSTEM_STORE_LOCAL_MACHINE_ID = 2;

uint CERT_STORE_DELETE_FLAG = 0x10;

uint CERT_SYSTEM_STORE_CURRENT_USER = CERT_SYSTEM_STORE_CURRENT_USER_ID << CERT_SYSTEM_STORE_LOCATION_SHIFT;

uint CERT_SYSTEM_STORE_LOCAL_MACHINE = CERT_SYSTEM_STORE_LOCAL_MACHINE_ID << CERT_SYSTEM_STORE_LOCATION_SHIFT;

CertUnregisterSystemStore("TestCertificate.cer", CERT_STORE_DELETE_FLAG | CERT_SYSTEM_STORE_CURRENT_USER);

}

[DllImport("crypt32.dll", CharSet = CharSet.Unicode)]

public static extern bool CertUnregisterSystemStore(string systemStore, uint flags);

I’ve also included the flags needed to delete stores from the local machine (as opposed to the current user account) in the script above, in case anyone ever needs to do that.
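If you want the script to tell you why a deletion failed rather than silently returning false, something along these lines might help. This is only a sketch: CertStoreRemover, DeleteFlagsFor and Delete are hypothetical names, and it assumes that CertUnregisterSystemStore reports its failure reason through GetLastError, which is why SetLastError is switched on in the DllImport.

```csharp
using System;
using System.ComponentModel;
using System.Runtime.InteropServices;

public static class CertStoreRemover
{
    // Values taken from the script above (originally from the Windows SDK headers).
    private const int CERT_SYSTEM_STORE_LOCATION_SHIFT = 16;
    private const uint CERT_SYSTEM_STORE_CURRENT_USER_ID = 1;
    private const uint CERT_SYSTEM_STORE_LOCAL_MACHINE_ID = 2;
    private const uint CERT_STORE_DELETE_FLAG = 0x10;

    [DllImport("crypt32.dll", CharSet = CharSet.Unicode, SetLastError = true)]
    private static extern bool CertUnregisterSystemStore(string systemStore, uint flags);

    // Combines the delete flag with the store location, shifted into the high word.
    public static uint DeleteFlagsFor(bool localMachine)
    {
        uint locationId = localMachine
            ? CERT_SYSTEM_STORE_LOCAL_MACHINE_ID
            : CERT_SYSTEM_STORE_CURRENT_USER_ID;
        return CERT_STORE_DELETE_FLAG | (locationId << CERT_SYSTEM_STORE_LOCATION_SHIFT);
    }

    public static void Delete(string storeName, bool localMachine)
    {
        // Assumption: on failure the Win32 error code describes what went wrong.
        if (!CertUnregisterSystemStore(storeName, DeleteFlagsFor(localMachine)))
            throw new Win32Exception(Marshal.GetLastWin32Error());
    }
}
```

For the current user the combined flags come out as 0x10010, and for the local machine as 0x20010. Deleting a local machine store will most likely need an elevated process.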

Getting the Correct HTTP Status Codes out of ASP.NET Custom Error Pages

September 08, 2010 2:27 PM by Daniel Chambers

If you are using the default ASP.NET custom error pages, chances are your site is returning the incorrect HTTP status codes for the errors that your users are experiencing (hopefully as few as possible!). Sure, your users see a pretty error page just fine, but your users aren’t always flesh and blood. Search engine crawlers are also your users (in a sense), and they don’t care about the pretty pictures and funny one-liners on your error pages; they care about the HTTP status codes returned. For example, if a request for a page that was removed consistently returns a 404 status code, a search engine will remove it from its index. However, if it doesn’t and instead returns the wrong error code, the search engine may leave the page in its index.

This is what happens if your non-existent pages don't return the correct status code!

Unfortunately, ASP.NET custom error pages don’t return the correct error codes. Here’s your typical ASP.NET custom error page configuration that goes into the Web.config:

<customErrors mode="On" defaultRedirect="~/Pages/Error">

<error statusCode="403" redirect="~/Pages/Error403" />

<error statusCode="404" redirect="~/Pages/Error404" />

</customErrors>

And here’s a Fiddler trace of what happens when someone requests a page that should simply return 404:

A trace of a request that should have just 404'd

As you can see, the request for /ThisPageDoesNotExist returned 302 and redirected the browser to the error page specified in the config (/Pages/Error404), but that page returned 200, which is the code for a successful request! Our human users wouldn’t notice a thing, as they’d see the error page displayed in their browser, but any search engine crawler would think that the page existed just fine because of the 200 status code! And this problem also occurs for other status codes, like 500 (Internal Server Error). Clearly, we have an issue here.

The first thing we need to do is stop the error pages from returning 200 and instead return the correct HTTP status code. This is easy to do. In the case of the 404 Not Found page, we can simply add this line in the view:

<% Response.StatusCode = (int)HttpStatusCode.NotFound; %>

We will need to do this to all views that handle errors (obviously changing the status code to the one appropriate for that particular error). This not only includes the pages you’ve configured in the customErrors element in the Web.config, but also any views you are using with ASP.NET MVC HandleError attributes (if you’re using ASP.NET MVC). After making these changes, our Fiddler trace looks like this:

A trace of a request that is 404ing, but still redirecting

We’ve now got the correct status code being returned, but there’s still an unnecessary redirect going on here. Why redirect when we can just return 404 and the error page HTML straight up? If we are using vanilla ASP.NET Forms, this is super easy to do with a quick configuration change; just set redirectMode to ResponseRewrite in the Web.config (this setting is new since .NET 3.5 SP1):

<customErrors mode="On" defaultRedirect="~/Error.aspx" redirectMode="ResponseRewrite">
    <error statusCode="403" redirect="~/Error403.aspx" />
    <error statusCode="404" redirect="~/Error404.aspx" />
</customErrors>

Unfortunately, this trick doesn’t work with ASP.NET MVC, as the method by which the response is rewritten using the specified error page doesn’t play nicely with MVC routes. One workaround is to use static HTML pages for your error pages; this sidesteps MVC routing, but also means you can’t use your master pages, making it a pretty crap solution. The easiest workaround I’ve found is to defenestrate ASP.NET custom errors and handle the errors manually through a bit of trickery in the Global.asax.

Firstly, we’ll handle the Error event in our Global.asax HttpApplication-derived class:

protected void Application_Error(object sender, EventArgs e)
{
    if (Context.IsCustomErrorEnabled)
        ShowCustomErrorPage(Server.GetLastError());
}

private void ShowCustomErrorPage(Exception exception)
{
    HttpException httpException = exception as HttpException;
    if (httpException == null)
        httpException = new HttpException(500, "Internal Server Error", exception);

    Response.Clear();
    RouteData routeData = new RouteData();
    routeData.Values.Add("controller", "Error");
    routeData.Values.Add("fromAppErrorEvent", true);

    switch (httpException.GetHttpCode())
    {
        case 403:
            routeData.Values.Add("action", "AccessDenied");
            break;

        case 404:
            routeData.Values.Add("action", "NotFound");
            break;

        case 500:
            routeData.Values.Add("action", "ServerError");
            break;

        default:
            routeData.Values.Add("action", "OtherHttpStatusCode");
            routeData.Values.Add("httpStatusCode", httpException.GetHttpCode());
            break;
    }

    Server.ClearError();

    IController controller = new ErrorController();
    controller.Execute(new RequestContext(new HttpContextWrapper(Context), routeData));
}

In Application_Error, we’re checking the setting in Web.config to see whether custom errors have been turned on or not. If they have been, we call ShowCustomErrorPage and pass in the exception. In ShowCustomErrorPage, we convert any non-HttpException into a 500-coded error (Internal Server Error). We then clear any existing response and set up some route values that we’ll be using to call into MVC controller-land later. Depending on which HTTP status code we’re dealing with (pulled from the HttpException), we target a different action method, and in the case that we’re dealing with an unexpected status code, we also pass across the status code as a route value (so that the view can set the correct HTTP status code to use, etc). We then clear the error, new up our MVC controller and execute it.
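The status-code-to-action mapping described above could also be pulled out into a small pure helper, which would make it easy to unit test without spinning up an HttpApplication. This is only a sketch: ErrorRouting and ActionFor are hypothetical names that are not part of the code above or of ASP.NET MVC; the action names are the ones used in ShowCustomErrorPage.

```csharp
using System;

// Hypothetical helper (not part of the original Global.asax code).
// It mirrors the switch statement in ShowCustomErrorPage.
public static class ErrorRouting
{
    // Returns the name of the ErrorController action that should
    // render the error page for the given HTTP status code.
    public static string ActionFor(int httpCode)
    {
        switch (httpCode)
        {
            case 403: return "AccessDenied";
            case 404: return "NotFound";
            case 500: return "ServerError";
            // Anything else falls through to the generic action, which
            // also needs the status code passed as a route value.
            default: return "OtherHttpStatusCode";
        }
    }
}
```

With a helper like this, ShowCustomErrorPage would add the "action" route value from ErrorRouting.ActionFor(httpException.GetHttpCode()) and only add "httpStatusCode" when the result is "OtherHttpStatusCode".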

Now we’ll define that ErrorController that we used in the Global.asax.

public class ErrorController : Controller
{
    [PreventDirectAccess]
    public ActionResult ServerError()
    {
        return View("Error");
    }

    [PreventDirectAccess]
    public ActionResult AccessDenied()
    {
        return View("Error403");
    }

    public ActionResult NotFound()
    {
        return View("Error404");
    }

    [PreventDirectAccess]
    public ActionResult OtherHttpStatusCode(int httpStatusCode)
    {
        return View("GenericHttpError", httpStatusCode);
    }

    private class PreventDirectAccessAttribute : FilterAttribute, IAuthorizationFilter
    {
        public void OnAuthorization(AuthorizationContext filterContext)
        {
            object value = filterContext.RouteData.Values["fromAppErrorEvent"];
            if (!(value is bool && (bool)value))
                filterContext.Result = new ViewResult { ViewName = "Error404" };
        }
    }
}

This controller is pretty simple except for the PreventDirectAccessAttribute that we’re using there. This attribute is an IAuthorizationFilter which basically forces the use of the Error404 view if any of the action methods was called through a normal request (i.e. if someone tried to browse to /Error/ServerError). This effectively hides the existence of the ErrorController. The attribute knows that the action method is being called through the error event in the Global.asax by looking for the “fromAppErrorEvent” route value that we explicitly set in ShowCustomErrorPage. This route value is not set by the normal routing rules and therefore is missing from a normal page request (i.e. requests to /Error/ServerError etc).
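The actions above rely on each view to set its own status code (using the Response.StatusCode line shown earlier). One possible variation, sketched here for the NotFound action only, is to set the status code in the action itself. This is an assumption about how you might arrange things rather than what the controller above does. The TrySkipIisCustomErrors line is an optional extra that asks IIS 7 and later not to replace the response body with its own error page; whether you need it depends on your IIS configuration.

```csharp
using System.Net;
using System.Web.Mvc;

// Variation on the ErrorController above; only NotFound is shown.
public class ErrorController : Controller
{
    public ActionResult NotFound()
    {
        // Set the status code here rather than inside the Error404 view.
        Response.StatusCode = (int)HttpStatusCode.NotFound;

        // Optional: ask IIS 7+ to leave our error page body alone.
        Response.TrySkipIisCustomErrors = true;

        return View("Error404");
    }
}
```

Keeping the status code in the action means the view stays purely presentational, at the cost of repeating the assignment in every error action.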

In the Web.config, we can now delete most of the customErrors element; the only thing we keep is the mode switch, which still works thanks to the if condition we put in Application_Error.

<customErrors mode="On">
    <!-- There is custom handling of errors in Global.asax -->
</customErrors>

If we now look at the Fiddler trace, we see the problem has been solved; the redirect is gone and the page correctly returns a 404 status code.

A trace showing the correct 404 behaviour

In conclusion, we’ve looked at a way to solve ASP.NET custom error pages returning incorrect HTTP status codes to the user. For ASP.NET Forms users the solution was easy, but for ASP.NET MVC users some extra manual work needed to be done. These fixes ensure that search engines that trawl your website don’t treat any error pages they encounter (such as a 404 Page Not Found error page) as actual pages.

Performing Date & Time Arithmetic Queries using Entity Framework v1

June 07, 2010 3:21 PM by Daniel Chambers (last modified on June 09, 2010 2:58 AM)

If one is writing legacy code in .NET 3.5 SP1’s Entity Framework v1 (yes, your brand new code has now been put into the legacy category by .NET 4 :( ), one will find a severe lack of any date & time arithmetic ability. If you look at LINQ to Entities, you will see that no date & time arithmetic methods are recognised, and if you look at the canonical functions that Entity SQL provides, you will find no date & time arithmetic functions there either. This strangely missing functionality in canonical ESQL has been resolved as of .NET 4; however, that doesn’t help those of us who haven’t yet upgraded to Visual Studio 2010! Thankfully, all is not lost: there is a solution that only sucks a little bit.

Date & time arithmetic is a pretty necessary ability. Imagine the following simplified scenario, where one has a “Contract” entity that defines a business contract that starts at a certain date (StartDate) and lasts for a duration in months (Duration).

Imagine that you need to perform a query that finds all Contracts that were active on a specific date. You may think of performing a LINQ to Entities query that looks like this:

IQueryable<Contract> contracts =
    from contract in Contracts
    where activeOnDate >= contract.StartDate &&
          activeOnDate < contract.StartDate.AddMonths(contract.Duration)
    select contract;

However, this is not supported by LINQ to Entities and you’ll get a NotSupportedException. With canonical ESQL missing any defined date & time arithmetic functions, it seems we are left without a recourse.

However, if one digs around in MSDN, one may stumble across the fact that the SQL Server Entity Framework provider defines some provider-specific ESQL functions which one can use to do date & time arithmetic. So we can write an ESQL query to get the functionality we wish:

const string eSqlQuery = @"SELECT VALUE c FROM Contracts AS c
                           WHERE @activeOnDate >= c.StartDate
                           AND @activeOnDate < SqlServer.DATEADD('month', c.Duration, c.StartDate)";

ObjectQuery<Contract> contracts =
    context.CreateQuery<Contract>(eSqlQuery, new ObjectParameter("activeOnDate", activeOnDate));

Note the use of the SqlServer.DATEADD ESQL function. The “SqlServer” bit is specifying the SQL Server provider’s specific namespace. Obviously this approach has some disadvantages: it’s not as nice as using LINQ to Entities, and it’s also not database provider agnostic (so if you’re using Oracle you’ll need to see whether your Oracle provider has something like this). However, the alternatives are either to write SQL manually (negating the usefulness of Entity Framework) or to download all the entities into local memory and use LINQ to Objects (almost never, ever an acceptable option!).

Notice that using the CreateQuery function to create the query from ESQL returned an ObjectQuery<T> (which implements IQueryable<T>)? This means that you can now use LINQ to Entities to change that query. For example, what if we wanted to perform some further filtering that can be customised by the user (the user’s filtering preferences are set in the ContractFilterSettings class in the below example):

private ObjectQuery<Contract> CreateFilterSettingsAsQueryable(ContractFilterSettings filterSettings, MyEntitiesContext context)
{
    IQueryable<Contract> query = context.Contracts;

    // The ESQL part must come first; LINQ to Entities operators are layered on top of it
    if (filterSettings.ActiveOnDate != null)
    {
        const string eSqlQuery = @"SELECT VALUE c FROM Contracts AS c
                                   WHERE @activeOnDate >= c.StartDate
                                   AND @activeOnDate < SqlServer.DATEADD('month', c.Duration, c.StartDate)";

        query = context.CreateQuery<Contract>(eSqlQuery, new ObjectParameter("activeOnDate", filterSettings.ActiveOnDate));
    }

    if (filterSettings.SiteId != null)
        query = query.Where(c => c.SiteID == filterSettings.SiteId);

    if (filterSettings.ContractNumber != null)
        query = query.Where(c => c.ContractNumber == filterSettings.ContractNumber);

    return (ObjectQuery<Contract>)query;
}

Being able to continue to use LINQ to Entities after creating the initial query in ESQL means that you can have the best of both worlds (forgetting the fact that we ought to be able to do date & time arithmetic in LINQ to Entities in the first place!). Note that although you can start a query in ESQL and then add to it with LINQ to Entities, you cannot start a query in LINQ to Entities and add to it with ESQL, hence why the ActiveOnDate filter setting was done first.
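The ContractFilterSettings class isn’t shown in this post. A minimal shape consistent with the null checks in the method above might look like the following; the property types are assumptions inferred from how the properties are used, not the real class.

```csharp
using System;

// Hypothetical shape of the filter settings class (types are assumed).
// A null property means "don't filter on this".
public class ContractFilterSettings
{
    // When set, only contracts active on this date are returned.
    public DateTime? ActiveOnDate { get; set; }

    // When set, only contracts for this site are returned.
    public int? SiteId { get; set; }

    // When set, only the contract with this number is returned.
    public string ContractNumber { get; set; }
}
```

Because every property is nullable, a freshly constructed instance applies no filters at all and the method simply returns the whole Contracts set as a query.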

For those who use .NET 4, unfortunately you’re still stuck with being unable to do date & time arithmetic with LINQ to Entities by default (Alex James told me on Twitter a while back that this was purely because of time constraints, not because they are evil or something :) ). However, you get canonical ESQL functions that are provider independent (so instead of using SqlServer.DATEADD above, you’d use AddMonths). If you really want to use LINQ to Entities (which is not surprising at all), you can create ESQL snippets in your model (“Model Defined Functions”) and then create annotated method stubs which Entity Framework will recognise when you use them inside a LINQ to Entities query expression tree. For more information see this post on the Entity Framework Design blog, and this MSDN article.

EDIT: Diego Vega kindly pointed out to me on Twitter that, in fact, Entity Framework 4 comes with some methods you can mix into your LINQ to Entities queries to call on EF v4’s new canonical date & time ESQL functions. Those methods (as well as a bunch of others) can be found in the EntityFunctions class as static methods. Thanks Diego!
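As a rough sketch of what that looks like on .NET 4, the original LINQ to Entities query could be written with EntityFunctions.AddMonths. The Contract class below is a hypothetical stand-in for the real entity (with Duration assumed to be in months), and ContractQueries and ActiveOn are names invented for this example.

```csharp
using System;
using System.Data.Objects; // EntityFunctions lives here in .NET 4
using System.Linq;

// Hypothetical minimal stand-in for the Contract entity.
public class Contract
{
    public DateTime StartDate { get; set; }
    public int Duration { get; set; } // assumed to be in months
}

public static class ContractQueries
{
    // Builds a query for the contracts that are active on the given date.
    // Pass in an Entity Framework query (e.g. context.Contracts).
    public static IQueryable<Contract> ActiveOn(IQueryable<Contract> contracts, DateTime activeOnDate)
    {
        return from contract in contracts
               where activeOnDate >= contract.StartDate &&
                     activeOnDate < EntityFunctions.AddMonths(contract.StartDate, contract.Duration)
               select contract;
    }
}
```

Note that the EntityFunctions methods are only markers for the query translator; calling them directly, or using them in a LINQ to Objects query, throws a NotSupportedException.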