Getting the Correct HTTP Status Codes out of ASP.NET Custom Error Pages

September 08, 2010 2:27 PM by Daniel Chambers

If you are using the default ASP.NET custom error pages, chances are your site is returning the incorrect HTTP status codes for the errors that your users are experiencing (hopefully as few as possible!). Sure, your users see a pretty error page just fine, but your users aren’t always flesh and blood. Search engine crawlers are also your users (in a sense), and they don’t care about the pretty pictures and funny one-liners on your error pages; they care about the HTTP status codes returned. For example, if a request for a page that was removed consistently returns a 404 status code, a search engine will remove it from its index. However, if it doesn’t and instead returns the wrong error code, the search engine may leave the page in its index.

This is what happens if your non-existent pages don't return the correct status code!

Unfortunately, ASP.NET custom error pages don’t return the correct error codes. Here’s your typical ASP.NET custom error page configuration that goes into the Web.config:

    <customErrors mode="On" defaultRedirect="~/Pages/Error">
        <error statusCode="403" redirect="~/Pages/Error403" />
        <error statusCode="404" redirect="~/Pages/Error404" />
    </customErrors>

And here’s a Fiddler trace of what happens when someone requests a page that should simply return 404:

A trace of a request that should have just 404'd

As you can see, the request for /ThisPageDoesNotExist returned 302 and redirected the browser to the error page specified in the config (/Pages/Error404), but that page returned 200, which is the code for a successful request! Our human users wouldn’t notice a thing, as they’d see the error page displayed in their browser, but any search engine crawler would think that the page existed just fine because of the 200 status code! And this problem also occurs for other status codes, like 500 (Internal Server Error). Clearly, we have an issue here.

The first thing we need to do is stop the error pages from returning 200 and instead return the correct HTTP status code. This is easy to do. In the case of the 404 Not Found page, we can simply add this line in the view:

<% Response.StatusCode = (int)HttpStatusCode.NotFound; %>

We will need to do this to all views that handle errors (obviously changing the status code to the one appropriate for that particular error). This not only includes the pages you’ve configured in the customErrors element in the Web.config, but also any views you are using with ASP.NET MVC HandleError attributes (if you’re using ASP.NET MVC). After making these changes, our Fiddler trace looks like this:

A trace of a request that is 404ing, but still redirecting

We’ve now got the correct status code being returned, but there’s still an unnecessary redirect going on here. Why redirect when we can just return 404 and the error page HTML straight up? If we are using vanilla ASP.NET Forms, this is super easy to do with a quick configuration change; just set redirectMode to ResponseRewrite in the Web.config (this setting is new as of .NET 3.5 SP1):

    <customErrors mode="On" defaultRedirect="~/Error.aspx" redirectMode="ResponseRewrite">
        <error statusCode="403" redirect="~/Error403.aspx" />
        <error statusCode="404" redirect="~/Error404.aspx" />
    </customErrors>

Unfortunately, this trick doesn’t work with ASP.NET MVC, as the method by which the response is rewritten using the specified error page doesn’t play nicely with MVC routes. One workaround is to use static HTML pages for your error pages; this sidesteps MVC routing, but also means you can’t use your master pages, making it a pretty crap solution. The easiest workaround I’ve found is to defenestrate ASP.NET custom errors and handle the errors manually through a bit of trickery in the Global.asax.

Firstly, we’ll handle the Error event in our Global.asax HttpApplication-derived class:

    protected void Application_Error(object sender, EventArgs e)
    {
        if (Context.IsCustomErrorEnabled)
            ShowCustomErrorPage(Server.GetLastError());
    }

    private void ShowCustomErrorPage(Exception exception)
    {
        HttpException httpException = exception as HttpException;
        if (httpException == null)
            httpException = new HttpException(500, "Internal Server Error", exception);

        Response.Clear();
        RouteData routeData = new RouteData();
        routeData.Values.Add("controller", "Error");
        routeData.Values.Add("fromAppErrorEvent", true);

        switch (httpException.GetHttpCode())
        {
            case 403:
                routeData.Values.Add("action", "AccessDenied");
                break;

            case 404:
                routeData.Values.Add("action", "NotFound");
                break;

            case 500:
                routeData.Values.Add("action", "ServerError");
                break;

            default:
                routeData.Values.Add("action", "OtherHttpStatusCode");
                routeData.Values.Add("httpStatusCode", httpException.GetHttpCode());
                break;
        }

        Server.ClearError();

        IController controller = new ErrorController();
        controller.Execute(new RequestContext(new HttpContextWrapper(Context), routeData));
    }

In Application_Error, we’re checking the setting in Web.config to see whether custom errors have been turned on or not. If they have been, we call ShowCustomErrorPage and pass in the exception. In ShowCustomErrorPage, we convert any non-HttpException into a 500-coded error (Internal Server Error). We then clear any existing response and set up some route values that we’ll be using to call into MVC controller-land later. Depending on which HTTP status code we’re dealing with (pulled from the HttpException), we target a different action method, and in the case that we’re dealing with an unexpected status code, we also pass across the status code as a route value (so that the view can set the correct HTTP status code to use, etc). We then clear the error, new up our MVC controller, and execute it.
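If you want to unit test the status-code-to-action mapping separately from the HttpApplication, one option is to pull the switch out into a small pure function. The following is only a sketch of that idea: the ErrorActionMap class and its ActionFor method are hypothetical names that don't appear in the Global.asax code above; only the action names are taken from it.

```csharp
using System.Collections.Generic;

// Hypothetical helper (not part of the original Global.asax): maps an HTTP
// status code to the ErrorController action name used in ShowCustomErrorPage.
public static class ErrorActionMap
{
    private static readonly Dictionary<int, string> Actions = new Dictionary<int, string>
    {
        { 403, "AccessDenied" },
        { 404, "NotFound" },
        { 500, "ServerError" }
    };

    // Returns the action for a known status code, or the catch-all action
    // for any other code (mirroring the switch's default case).
    public static string ActionFor(int httpStatusCode)
    {
        string action;
        return Actions.TryGetValue(httpStatusCode, out action)
            ? action
            : "OtherHttpStatusCode";
    }
}
```

Note that the default case in the switch also adds the httpStatusCode route value; a caller using this helper would still need to do that itself.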

Now we’ll define that ErrorController that we used in the Global.asax.

    public class ErrorController : Controller
    {
        [PreventDirectAccess]
        public ActionResult ServerError()
        {
            return View("Error");
        }

        [PreventDirectAccess]
        public ActionResult AccessDenied()
        {
            return View("Error403");
        }

        public ActionResult NotFound()
        {
            return View("Error404");
        }

        [PreventDirectAccess]
        public ActionResult OtherHttpStatusCode(int httpStatusCode)
        {
            return View("GenericHttpError", httpStatusCode);
        }

        private class PreventDirectAccessAttribute : FilterAttribute, IAuthorizationFilter
        {
            public void OnAuthorization(AuthorizationContext filterContext)
            {
                object value = filterContext.RouteData.Values["fromAppErrorEvent"];
                if (!(value is bool && (bool)value))
                    filterContext.Result = new ViewResult { ViewName = "Error404" };
            }
        }
    }

This controller is pretty simple except for the PreventDirectAccessAttribute that we’re using there. This attribute is an IAuthorizationFilter which basically forces the use of the Error404 view if any of the action methods was called through a normal request (ie. if someone tried to browse to /Error/ServerError). This effectively hides the existence of the ErrorController. The attribute knows that the action method is being called through the error event in the Global.asax by looking for the “fromAppErrorEvent” route value that we explicitly set in ShowCustomErrorPage. This route value is not set by the normal routing rules and therefore is missing from a normal page request (ie. requests to /Error/ServerError etc).

In the Web.config, we can now delete most of the customErrors element; the only thing we keep is the mode switch, which still works thanks to the if condition we put in Application_Error.

    <customErrors mode="On">
        <!-- There is custom handling of errors in Global.asax -->
    </customErrors>

If we now look at the Fiddler trace, we see the problem has been solved; the redirect is gone and the page correctly returns a 404 status code.

A trace showing the correct 404 behaviour

In conclusion, we’ve looked at a way to solve ASP.NET custom error pages returning incorrect HTTP status codes to the user. For ASP.NET Forms users the solution was easy, but for ASP.NET MVC users some extra manual work needed to be done. These fixes ensure that search engines that trawl your website don’t treat any error pages they encounter (such as a 404 Page Not Found error page) as actual pages.

Deep Inside ASP.NET MVC 2 Model Metadata and Validation

April 14, 2010 5:33 PM by Daniel Chambers (last modified on April 14, 2010 6:15 PM)

One of the big ticket features of ASP.NET MVC 2 is its ability to perform model validation at the model binding stage in the controller. It also has the ability to store metadata about your model itself (such as what properties are required ones, etc) and therefore can be more intelligent when it automatically renders an HTML interface for your data model.

Unfortunately, the way ASP.NET MVC 2 handles validation (in the controller) is at odds with my preferred way to handle validation (in the business layer, with validation errors batched and thrown as exceptions back up to the controller; I used xVal for this). In order to understand ASP.NET MVC 2’s validation I wanted to know how it worked, not just how to use it. Unfortunately, at the time of writing, there seemed to be very few sources of information that described the way MVC 2’s model metadata and validation worked at a high, architectural level. The ASP.NET MVC MSDN class documentation is pretty horrible (it’s anaemic, in terms of detail). Sure, there are blog articles on how to use it, but none (that I could find, anyway) on how it really all works together under the covers. And if you don’t know how it works, it can be quite difficult to know how to use it most effectively.

To resolve this lack of documentation, this blog post attempts to explain the architecture of the ASP.NET MVC 2 metadata and validation features and to show you, at a high level, how they work. As I am not a member of the MVC team at Microsoft, I don’t have access to any architecture documentation they may have, so keep in mind everything in this blog was deduced by reading the MVC source code and judicious application of .NET Reflector. If I’ve made any mistakes, please let me know in the comments.

Model Metadata

ASP.NET MVC 2 has the ability to create metadata about your data model and use this for various tasks such as validation and special rendering. Some examples of the metadata that it stores are whether a property on a data model class is required, whether it’s read only, and what its display name is. As an example, the “is required” metadata would be useful as it would allow you to automatically write HTML and JavaScript to ensure that the user enters a value in a textbox, and that the textbox’s label is appended with an asterisk (*) so that the user knows that it is a required field.

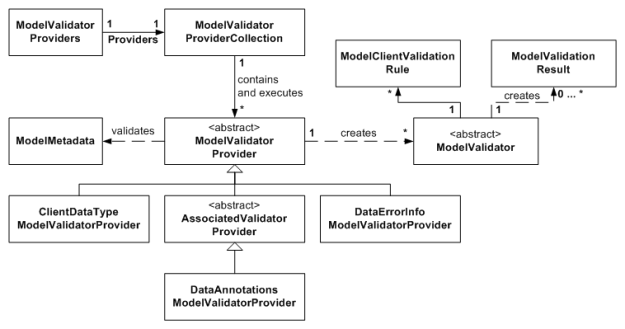

Figure 1. ModelMetadata and Providers Class Diagram

The ASP.NET MVC Model Metadata system stores metadata across many instances of the ModelMetadata class (and subclasses). ModelMetadata is a recursive data structure. The root ModelMetadata instance stores the metadata for the root data model object type, and also stores references to a ModelMetadata instance for each property on that root data model type. Figure 2 shows a visual example of this for an example “Book” data model class.

Figure 2. Model Metadata Recursive Data Structure Diagram (note that not all properties on ModelMetadata are shown)

As you can see in Figure 2, there is a root ModelMetadata that describes the Book instance. You’ll notice that its ContainerType and PropertyName properties are null, which indicates that this is the root ModelMetadata instance. This root ModelMetadata instance has a Properties property that provides lazy-loaded access to ModelMetadata instances for each of the Book type’s properties. These ModelMetadata instances do have ContainerType and PropertyName set because, being for properties, they have a container type (ie. Book) and a name (ie. Title).
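To make the recursive shape concrete, here is a deliberately simplified stand-in written with plain reflection. It is not the real System.Web.Mvc.ModelMetadata class: the MetadataNode type, its ForType method and the Book model's properties are all hypothetical and exist only to illustrate the root/property relationship and the lazy loading of child nodes.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical data model used only for illustration.
public class Book
{
    public string Title { get; set; }
    public int PageCount { get; set; }
}

// Simplified stand-in for ModelMetadata; NOT the real System.Web.Mvc class.
public class MetadataNode
{
    private List<MetadataNode> _properties;

    public Type ModelType { get; private set; }
    public Type ContainerType { get; private set; }   // null for the root node
    public string PropertyName { get; private set; }  // null for the root node

    public MetadataNode(Type modelType, Type containerType, string propertyName)
    {
        ModelType = modelType;
        ContainerType = containerType;
        PropertyName = propertyName;
    }

    // Child nodes are built on first access, one per public property.
    public IEnumerable<MetadataNode> Properties
    {
        get
        {
            if (_properties == null)
            {
                _properties = ModelType.GetProperties()
                    .Select(p => new MetadataNode(p.PropertyType, ModelType, p.Name))
                    .ToList();
            }
            return _properties;
        }
    }

    // Creates a root node, which has no container type or property name.
    public static MetadataNode ForType(Type modelType)
    {
        return new MetadataNode(modelType, null, null);
    }
}
```

Calling MetadataNode.ForType(typeof(Book)) gives a root node whose ContainerType and PropertyName are null, and whose Properties contain one node per Book property, each with ContainerType set to Book.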

So how do we get instances of ModelMetadata for a data model, and how is the model’s metadata divined in the first place? This is where ModelMetadataProvider classes come in (see Figure 1). ModelMetadataProvider classes are able to create ModelMetadata instances. However, ModelMetadataProvider itself is an abstract class, and delegates all its functionality to subclasses. The DataAnnotationsModelMetadataProvider is an implementation that is able to create model metadata based off the attributes in the System.ComponentModel.DataAnnotations namespace that are applied to data model classes and their properties. For example, the RequiredAttribute is used to determine whether a property is required or not (ie. it causes the ModelMetadata.IsRequired property to be set).
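As a rough illustration of the idea of deriving metadata from attributes, the sketch below decorates a model and reads the attributes back with plain reflection. This is a simplification and not how the MVC provider is implemented; the AnnotatedBook and AnnotationReader types are hypothetical, while RequiredAttribute and DisplayNameAttribute are the real framework attributes.

```csharp
using System;
using System.ComponentModel;
using System.ComponentModel.DataAnnotations;
using System.Reflection;

// Hypothetical data model; the attributes themselves are real framework types.
public class AnnotatedBook
{
    [Required]
    [DisplayName("Book title")]
    public string Title { get; set; }

    public string Blurb { get; set; }
}

// Hypothetical helper that reads attribute-based metadata via reflection.
public static class AnnotationReader
{
    // True if the named property carries a [Required] attribute.
    public static bool IsRequired(Type type, string propertyName)
    {
        PropertyInfo property = type.GetProperty(propertyName);
        return Attribute.IsDefined(property, typeof(RequiredAttribute));
    }

    // The [DisplayName] text if present, otherwise the property name itself.
    public static string DisplayNameOf(Type type, string propertyName)
    {
        PropertyInfo property = type.GetProperty(propertyName);
        DisplayNameAttribute attribute = (DisplayNameAttribute)
            Attribute.GetCustomAttribute(property, typeof(DisplayNameAttribute));
        return attribute != null ? attribute.DisplayName : propertyName;
    }
}
```

With this in place, AnnotationReader.IsRequired(typeof(AnnotatedBook), "Title") is true, while the same call for "Blurb" is false because that property has no attributes.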

You’ll notice that the DataAnnotationsModelMetadataProvider does not inherit directly from ModelMetadataProvider (see Figure 1). Instead, it inherits from the AssociatedMetadataProvider abstract class. The AssociatedMetadataProvider class actually performs the getting of the attributes off the class and its properties by using an ICustomTypeDescriptor behind the scenes (which turns out to be the internal AssociatedMetadataTypeTypeDescriptor class, which is constructed by the AssociatedMetadataTypeTypeDescriptionProvider class). The AssociatedMetadataProvider then delegates the actual responsibility of building a ModelMetadata instance using the attributes it found to a subclass. The DataAnnotationsModelMetadataProvider inherits this responsibility.

As you can see, the whole metadata-creation system is very modular and allows you to generate model metadata in any way you want by just using a different ModelMetadataProvider class. The Provider class that the system uses by default is set by creating an instance of it and putting it in the static property ModelMetadataProviders.Current. By default, an instance of DataAnnotationsModelMetadataProvider is used.

Model Validation

ASP.NET MVC 2 includes a very modular and powerful validation system. Unfortunately, it’s very coupled to ASP.NET MVC as it uses ControllerContexts throughout itself. This means you can’t move its use into your business layer; you have to keep it in the controller. The modularity and power comes with a trade-off: architectural complexity. The validation system architecture contains quite a lot of indirection, but when you step through it you can see the reasons why the indirection exists: for extensibility.

Figure 3 shows an overview of the validation system architecture. Key to the system are ModelValidators, which when executed, are able to validate a part of the model in a specific way and return ModelValidationResults, which describe any validation errors that occurred with a simple text message. ModelValidators also have a collection of ModelClientValidationRules, which are simple data structures that represent the client-side JavaScript validation rules to be used for that ModelValidator.

ModelValidators are created by ModelValidatorProvider classes when they are asked to validate a particular ModelMetadata object. Many ModelValidatorProviders can be used at the same time to provide validation (ie. return ModelValidators) on a single ModelMetadata. This is illustrated by the ModelValidatorProviders.Providers static property, which is of type ModelValidatorProviderCollection. This collection, by default, holds instances of the ClientDataTypeModelValidatorProvider, DataAnnotationsModelValidatorProvider and DataErrorInfoModelValidatorProvider classes. This collection has a method (GetValidators) that allows you to easily execute all contained ModelValidatorProviders and get all the returned ModelValidators back. In fact, this is what is called when you call ModelMetadata.GetValidators.

The DataAnnotationsModelValidatorProvider is able to create ModelValidators that validate a model held in a ModelMetadata object by looking at System.ComponentModel.DataAnnotations attributes that have been placed on the data model class and its properties. In a similar fashion to the DataAnnotationsModelMetadataProvider, the DataAnnotationsModelValidatorProvider leaves the actual retrieving of the attributes from the data types to its base class, the AssociatedValidatorProvider.

The ClientDataTypeModelValidatorProvider produces ModelValidators that don’t actually do any server-side validation. Instead, the ModelValidators that it produces provide the ModelClientValidationRules needed to enforce data typing (such as numeric types like int) on the client. Obviously, no server-side validation needs to be done for data types since C# is a strongly-typed language and only an int can be in an int property. On the client-side, it is quite possible for the user to type a string (ie “abc”) into a textbox that requires an int, hence the need for special ModelClientValidationRules to deal with this.

The DataErrorInfoModelValidatorProvider is able to create ModelValidators that validate a data model that implements the IDataErrorInfo interface.
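For readers who haven't used IDataErrorInfo, here is a minimal sketch of a model that implements it. The Review class and its rules are hypothetical; only the interface (System.ComponentModel.IDataErrorInfo, with its Error property and string indexer) is the real thing.

```csharp
using System.ComponentModel;

// Hypothetical model that reports its own validation errors via IDataErrorInfo.
public class Review : IDataErrorInfo
{
    public string Author { get; set; }
    public int Rating { get; set; }

    // Object-level error; null or empty means "no error".
    public string Error
    {
        get { return null; }
    }

    // Property-level error for the named property; null or empty means valid.
    public string this[string columnName]
    {
        get
        {
            if (columnName == "Author" && string.IsNullOrEmpty(Author))
                return "Author is required.";
            if (columnName == "Rating" && (Rating < 1 || Rating > 5))
                return "Rating must be between 1 and 5.";
            return null;
        }
    }
}
```

The indexer is asked about one property at a time, while the Error property is for problems with the object as a whole.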

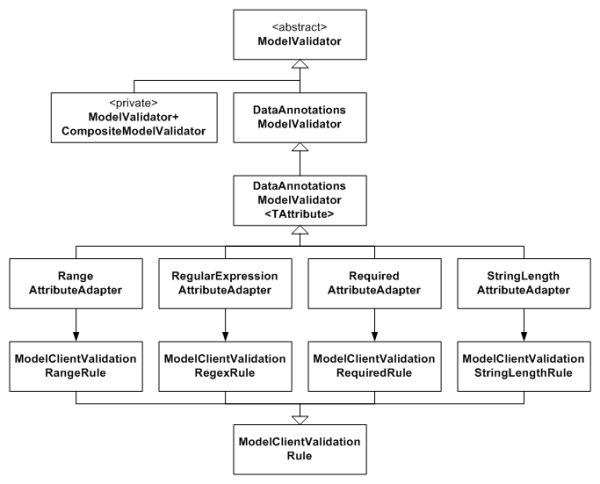

You may have noticed that ModelValidator is an abstract class. ModelValidator delegates the actual validation functionality to its subclasses, and it’s these concrete subclasses that the different ModelValidatorProviders create. Let’s take a deeper look at this, with a focus on the ModelValidators that the DataAnnotationsModelValidatorProvider creates.

As you can see in Figure 4, there is a ModelValidator concrete class for each System.ComponentModel.DataAnnotations validation attribute (ie. RangeAttributeAdapter is for the RangeAttribute). The DataAnnotationsModelValidator provides the general ability to execute a ValidationAttribute. The generic DataAnnotationsModelValidator<TAttribute> simply allows subclasses to access the concrete ValidationAttribute that the ModelValidator is for through a type-safe property.

You’ll notice in Figure 4 how each DataAnnotationsModelValidator<TAttribute> subclass has a matching ModelClientValidationRule. Each of those subclasses (ie. ModelClientValidationRangeRule) represents the client-side version of the ModelValidator. In short, they contain the rule name and whatever data needs to go along with it (for example, for the ModelClientValidationStringLengthRule, the specific string length is set inside that object instance). These rules can be serialised out to the page so that the page’s JavaScript validation logic can hook up client-side validation. (See Phil Haack’s blog on this for more information).

The DataAnnotationsModelValidatorProvider implements an extra layer of indirection that allows you to get it to create custom ModelValidators for any custom ValidationAttributes you might choose to create and use on your data model. Internally it has a Dictionary<Type, DataAnnotationsModelValidationFactory>, where the Type of a ValidationAttribute is associated with a DataAnnotationsModelValidationFactory (a delegate) that can create a ModelValidator for the attribute type it is associated with. You can add to this dictionary by calling the static DataAnnotationsModelValidatorProvider.RegisterAdapterFactory method.
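The dictionary-of-factories idea can be sketched without any MVC types. In the toy below, EvenNumberAttribute is a hypothetical custom ValidationAttribute and AdapterRegistry is a hypothetical registry; its factories just build a description string, whereas the real provider's factories build ModelValidators. The fallback for unregistered attribute types is a design choice of this sketch, not a claim about the framework's behaviour.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;

// Hypothetical custom attribute: valid only for even integers.
public class EvenNumberAttribute : ValidationAttribute
{
    public override bool IsValid(object value)
    {
        return value is int && (int)value % 2 == 0;
    }
}

// Toy registry imitating the "attribute type -> factory" dictionary idea.
// Factories here return a description string so the sketch stays free of
// System.Web.Mvc; the real factories return ModelValidator instances.
public static class AdapterRegistry
{
    private static readonly Dictionary<Type, Func<ValidationAttribute, string>> Factories =
        new Dictionary<Type, Func<ValidationAttribute, string>>();

    public static void Register(Type attributeType, Func<ValidationAttribute, string> factory)
    {
        Factories[attributeType] = factory;
    }

    // Uses the factory registered for the attribute's exact type, falling
    // back to a generic description when nothing has been registered
    // (the fallback is an assumption made for this sketch).
    public static string Describe(ValidationAttribute attribute)
    {
        Func<ValidationAttribute, string> factory;
        if (Factories.TryGetValue(attribute.GetType(), out factory))
            return factory(attribute);
        return "generic:" + attribute.GetType().Name;
    }
}
```

Keying the dictionary on the attribute's Type is what lets new attribute types be supported without touching the provider itself; you just register another factory.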

The CompositeModelValidator is a private inner class of ModelValidator and is returned when you call the static ModelValidator.GetModelValidator method. It is a special ModelValidator that is able to take a ModelMetadata instance, validate it by using its GetValidators method, and validate its properties by calling GetValidators on each of the ModelMetadata instances returned for each model property. It collects all the ModelValidationResult objects returned by all the ModelValidators that it executes and returns them in one big bundle.

Putting Metadata and Validation to Use

Now that we’re familiar with the workings of the ASP.NET MVC 2 Metadata and Validation systems, let’s see how we can actually use them to validate some data. Note that you don’t need to actually cause validation to happen explicitly, as ASP.NET MVC will trigger it to happen during model binding. This section just shows how the massive system we’ve looked at is kicked into execution.

The Controller.TryValidateModel method is a good example of showing model metadata creation and model validation in action:

    protected internal bool TryValidateModel(object model, string prefix)
    {
        if (model == null)
            throw new ArgumentNullException("model");

        ModelMetadata modelMetadata = ModelMetadataProviders.Current.GetMetadataForType(() => model, model.GetType());
        ModelValidator compositeValidator = ModelValidator.GetModelValidator(modelMetadata, base.ControllerContext);

        foreach (ModelValidationResult result in compositeValidator.Validate(null))
        {
            this.ModelState.AddModelError(DefaultModelBinder.CreateSubPropertyName(prefix, result.MemberName), result.Message);
        }

        return this.ModelState.IsValid;
    }

The first thing we do in the above method is create the ModelMetadata for the root object in our model (which is the model object passed in as a method parameter). We then get a CompositeModelValidator that will internally get and execute all the ModelValidators for the validation of the root object type and all its properties. Finally, we loop over all the validation errors gotten from executing the CompositeModelValidator (which executes all the ModelValidators it fetched internally) and add each error to the controller’s ModelState.

The CompositeModelValidator really hides the actual work of getting and executing the ModelValidators from us. So let’s see how it works internally.

    public override IEnumerable<ModelValidationResult> Validate(object container)
    {
        bool propertiesValid = true;

        foreach (ModelMetadata propertyMetadata in Metadata.Properties)
        {
            foreach (ModelValidator propertyValidator in propertyMetadata.GetValidators(ControllerContext))
            {
                foreach (ModelValidationResult propertyResult in propertyValidator.Validate(Metadata.Model))
                {
                    propertiesValid = false;
                    yield return new ModelValidationResult
                    {
                        MemberName = DefaultModelBinder.CreateSubPropertyName(propertyMetadata.PropertyName, propertyResult.MemberName),
                        Message = propertyResult.Message
                    };
                }
            }
        }

        if (propertiesValid)
        {
            foreach (ModelValidator typeValidator in Metadata.GetValidators(ControllerContext))
                foreach (ModelValidationResult typeResult in typeValidator.Validate(container))
                    yield return typeResult;
        }
    }

First it validates all the properties on the object that is held by the ModelMetadata it was created with (the Metadata property). It does this by getting the ModelMetadata of each property, getting the ModelValidators for that ModelMetadata, then executing each ModelValidator and yield returning the ModelValidationResults (if any; there will be none if there were no validation errors).

Then, if all the properties were valid (if there were no ModelValidationResults returned from any of the ModelValidators) it proceeds to validate the root ModelMetadata by getting its ModelValidators, executing each one, and yield returning any ModelValidationResults.

The ModelMetadata.GetValidators method that the CompositeModelValidator is using simply asks the default ModelValidatorProviderCollection to execute all its ModelValidatorProviders and returns all the created ModelValidators:

    public virtual IEnumerable<ModelValidator> GetValidators(ControllerContext context)
    {
        return ModelValidatorProviders.Providers.GetValidators(this, context);
    }

Peanut-Gallery Criticism

The only criticism I have is levelled against the Model Validation system that for some reason requires a ControllerContext to be passed around internally (see the ModelValidator constructor). As far as I can see, that ControllerContext is never actually used by any out of the box validation component, which leads me to question why it needs to exist. The reason I dislike it is because it prevents me from even considering using the validation system down in the business layer (as opposed to in the controller) without coupling the business layer to ASP.NET MVC. However, there may be a good reason as to why it exists that I am not privy to.

Conclusion

In this blog post we’ve addressed the problem of a serious lack of documentation that explains the inner workings and architecture of the ASP.NET MVC 2 Model Metadata and Validation systems (that existed at least at the time of writing). We’ve systematically deconstructed and looked at the architecture of the two systems and seen how the architecture enables you to easily generate metadata about your data model and validate it, and how it provides the flexibility to plug in, change and extend the basic functionality to meet your specific use case.

DigitallyCreated Utilities v1.0.0 Released

March 24, 2010 2:43 PM by Daniel Chambers (last modified on June 07, 2010 3:35 PM)

After a hell of a lot of work, I am happy to announce that the 1.0.0 version of DigitallyCreated Utilities has been released! DigitallyCreated Utilities is a collection of many neat reusable utilities for lots of different .NET technologies that I’ve developed over time and personally use on this website, as well as on others I have a hand in developing. It’s a fully open source project, licenced under the Ms-PL licence, which means you can pretty much use it wherever you want and do whatever you want to it. No viral licences here.

The main reason that it has taken me so long to release this version is because I’ve been working hard to get all the wiki documentation on CodePlex up to scratch. After all, two of the project values are:

- To provide fully XML-documented source code

- To back up the source code documentation with useful tutorial articles that help developers use this project

And truly, nothing is more frustrating than code with bad documentation. To me, bad documentation is the lack of a unifying tutorial that shows the functionality in action, and the lack of decent XML documentation on the code. Sorry, XMLdoc that’s autogenerated by tools like GhostDoc, and never added to by the author, just doesn’t cut it. If you can auto-generate the documentation from the method and parameter names, it’s obviously not providing any extra value above and beyond what was already there without it!

So what does DCU v1.0.0 bring to the table? A hell of a lot actually, though you may not need all of it for every project. Here’s the feature list grouped by broad technology area:

- ASP.NET MVC and LINQ

    - Sorting and paging of data in a table made easy by HtmlHelpers and LINQ extensions (see tutorial)

- ASP.NET MVC

    - HtmlHelpers

        - TempInfoBox - A temporary "action performed" box that displays to the user for 5 seconds then fades out (see tutorial)

        - CollapsibleFieldset - A fieldset that collapses and expands when you click the legend (see tutorial)

        - Gravatar - Renders an img tag for a Gravatar (see tutorial)

        - CheckboxStandard & BoolBinder - Renders a normal checkbox without MVC's normal hidden field (see tutorial)

        - EncodeAndInsertBrsAndLinks - Great for the display of user input, this will insert <br/>s for newlines and <a> tags for URLs and escape all HTML (see tutorial)

    - IncomingRequestRouteConstraint - Great for supporting old permalink URLs using ASP.NET routing (see tutorial)

    - Improved JsonResult - Replaces ASP.NET MVC's JsonResult with one that lets you specify JavaScriptConverters (see tutorial)

    - Permanently Redirect ActionResults - Redirect users with 301 (Moved Permanently) HTTP status codes (see tutorial)

    - Miscellaneous Route Helpers - For example, RouteToCurrentPage (see tutorial)

- LINQ

    - MatchUp & Federator LINQ methods - Great for doing diffs on sequences (see tutorial)

- Entity Framework

    - CompiledQueryReplicator - Manage your compiled queries such that you don't accidentally bake in the wrong MergeOption and create a difficult to discover bug (see tutorial)

    - Miscellaneous Entity Framework Utilities - For example, ClearNonScalarProperties and Setting Entity Properties to Modified State (see tutorial)

- Error Reporting

    - Easily wire up some simple classes and have your application email you detailed exception and error object dumps (see tutorial)

- Concurrent Programming

- Unity & WCF

    - WCF Client Injection Extension - Easily use dependency injection to transparently inject WCF clients using Unity (see tutorial)

- Miscellaneous Base Class Library Utilities

    - SafeUsingBlock and DisposableWrapper - Work with IDisposables in an easier fashion and avoid the bug where using blocks can silently swallow exceptions (see tutorial)

    - Time Utilities - For example, TimeSpan To Ago String, TzId -> Windows TimeZoneInfo (see tutorial)

    - Miscellaneous Utilities - Collection Add/RemoveAll, Base64StreamReader, AggregateException (see tutorial)

DCU is split across six different assemblies so that developers can pick and choose the stuff they want and not take unnecessary dependencies if they don’t have to. This means if you don’t use Unity in your application, you don’t need to take a dependency on Unity just to use the Error Reporting functionality.

I’m really pleased about this release as it’s the culmination of rather a lot of work on my part that I think will help other developers write their applications more easily. I’m already using it here on DigitallyCreated in many many places; for example the Error Reporting code tells me when this site crashes (and has been invaluable so far), the CompiledQueryReplicator helps me use compiled queries effectively on the back-end, and the ReaderWriterLock is used behind the scenes for the Twitter feed on the front page.

I hope you enjoy this release and find some use for it in your work or play activities. You can download it here.

DigitallyCreated v4.0 Launched

February 14, 2010 3:03 PM by Daniel Chambers (last modified on February 14, 2010 3:33 PM)

After over a month of work (in between replaying Mass Effect and my part-time work at Onset), I’ve finally finished the first version of DigitallyCreated v4.0. DigitallyCreated has been my website for many many years and has seen three major revisions before this one. However, those revisions were only HTML layout revisions; v3.0 upgraded the site to use a div-based CSS layout instead of a table layout, and v2.0 was so long ago I don’t even remember what it upgraded over v1.0.

Version 4 is DigitallyCreated’s biggest change since its very first version. It has received a brand new look and feel, and instead of being static HTML pages with a touch of 2 second hacked-up PHP thrown in, v4.0 is written from the ground up using C#, .NET Framework 3.5 SP1, and ASP.NET MVC. Obviously, there’s no point re-inventing the wheel (ignoring the irony that this is a custom-made blog site), so DigitallyCreated uses a few open-source libraries (.NET ones and JavaScript ones) in its makeup:

- DigitallyCreated Utilities – a few libraries full of lots of little utilities ranging from MVC HtmlHelpers, to DateTime and TimeZoneInfo utilities, to Entity Framework utilities and LINQ extensions, to a pretty error and exception emailing utility.

- DotNetOpenAuth – a library that implements the OpenID standard, used for user authentication

- XML-RPC.NET – a library that allows you to create XML-RPC web services (since WCF doesn’t natively support it)

- Unity Application Block – a Microsoft open-source dependency injection library (used to decouple the business and UI layers)

- xVal – a library that enables declarative, attribute-based, server-side & client-side form validation

- jQuery – the popular JavaScript library, without which JavaScript development is like pulling teeth without anaesthetic

- jQuery.Validate – an extension to jQuery that makes client-side form validation easier (used by xVal)

- openid-selector (modified) – the JavaScript that powers the pretty logos on the OpenID sign in page

- qTip – a jQuery extension that makes doing rich tooltips easier

- IE6Update – a JavaScript library that encourages viewers still on IE6 to upgrade to a browser that doesn’t make baby Jesus cry

- SyntaxHighlighter – a JavaScript library that handles the syntax highlighting of any code snippets I use

So what are some of the cool features that make v4.0 so much better? Firstly, I’ve finally got a real blogging system. Using an implementation of the MetaWeblog API (plus some small extras) I can use Windows Live Writer (which is awesome, FYI) to write my blogs and post them up to the website. The site automatically generates RSS feeds for almost everything to do with blogs. You can get an RSS feed for all blogs, for all blogs in a particular category or tag, for all comments, for comments on a blog, or for all comments on all blogs in a particular category or tag. Thanks to ASP.NET MVC, that was really easy to do, since most of the feeds are simply a different “view” of the blog data. And finally, the blog system now allows people to comment on blog posts, a long needed feature.

The new blog system makes it so much more convenient for me to blog now. Previously, posting a blog was a chore: write the blog, put the HTML into a new file, tell the hacky PHP rubbish code about the new file (increment the total number of posts), manually update the RSS feed XML, and then upload all the changes via FTP. Now I just write the post in Live Writer and click publish.

The URLs that the blog posts use are now slightly search engine optimised (i.e. they use a slug that effectively puts the post title in the URL). I’ve made sure that the old permalinks to blog posts still work for the new site. If you use an old URL, you’ll get permanently redirected to the new URL. Permalinks are a promise. :)
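For anyone wondering what turning a post title into a slug might look like, here is a minimal sketch. It is not the code the site actually runs; the SlugGenerator class, the ToSlug method, and the exact rules (lowercase, strip punctuation, hyphenate whitespace) are all assumptions made for illustration.

```csharp
using System.Text.RegularExpressions;

// Hypothetical slug generator, for illustration only.
public static class SlugGenerator
{
    public static string ToSlug(string title)
    {
        string slug = title.ToLowerInvariant();

        // Drop anything that isn't a lowercase letter, digit, whitespace or hyphen.
        slug = Regex.Replace(slug, @"[^a-z0-9\s-]", "");

        // Collapse each run of whitespace and/or hyphens into a single hyphen.
        slug = Regex.Replace(slug, @"[\s-]+", "-");

        // Remove any hyphens left dangling at either end.
        return slug.Trim('-');
    }
}
```

Under those rules, a title like "ASP.NET MVC Compared to JSF" comes out as "aspnet-mvc-compared-to-jsf". A real implementation would also need to deal with non-English characters and with two posts that produce the same slug.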

Other than the big blog system, there are some other cool things on the site. One is the fact you can sign in with your OpenID. Doing your own authentication is so 2006 :). From the user’s perspective it means that they don’t need to sign up for a new account just to comment on the blog posts. They can simply log in with their existing OpenID, which could be their Google or Yahoo account (or soon their Windows Live ID). If their OpenID provider supports it (and the user allows it), the site pulls some of their details from their OpenID, like their nickname, email, website, and timezone, so they don’t have to enter their details manually. I use the user’s email address to pull and display their Gravatar when they submit a comment. All the dates and times on the site are adjusted to match the logged in user’s time zone.

Another good thing is that the home page is no longer a waste of space. In v3.0, the home page displayed some out of date website news and other old information. Because uploading blog posts was a manual process, I couldn’t be bothered putting blog snippets on the home page, since that would have meant me doing it manually for every blog post. In v4.0, the site does it for me. It also runs a background thread, polls my Twitter feed, and pulls my tweets for display.

I’ve also trimmed a lot of content. The old DigitallyCreated had a lot of content that was ancient, never updated, and read like it had been written by a 15 year old (it had been!). I cut all that stuff. However, I kept the resume page, and jazzed it up with a bit of JavaScript so that it’s slick and sexy. It no longer shows you my life history back into high school by default. Instead, it shows you what you care about: the most recent stuff. The older things are still there, but are hidden by default and can be shown by clicking “show me more”-style JavaScript links.

Behind the scenes there’s an error reporting system that catches crashes and emails nice pretty exception object dumps to me, so that I know what went wrong, what page it occurred on, and when it happened so that I can fix it. And of course, how can we forget the funny error pages?

Of course, all this new stuff is just the beginning of the improvements I’m making to the site. There are more features that I wanted to add, but I had to draw the release line somewhere, so I drew it here. In the future, I’m looking at:

- Not displaying the full blog posts in the blog post lists. Instead, I’ll have a “show me more”-style link that shows the rest of the post when you click it.

- A right-hand panel on the blog pages which will contain, in particular, a list of categories and a coloured tag cloud. Currently, the only way to browse tags and categories is to see one used on a post and to click it. That’s rubbish discoverability.

- “Reply to” in comments, so that people can reply to other comments. I may also consider a peer reviewed comment system where you can “thumbs-up” and “thumbs-down” comments.

- The ability to associate multiple OpenIDs with the same account here at DigitallyCreated. This could be useful for people who want to change their OpenID, or use a new one.

- A full file upload system, which can only be used by myself for when I want to upload a file for a friend to download that’s too big for email (like a large installer or something). The files will be able to be secured so that only particular OpenIDs can download them.

- An in-browser blog editing UI, so that I’m not tied to using Windows Live Writer to write blogs.

- Blog search. I might fiddle with my own implementation for fun, but search is hard, so I may end up delegating to Google or Bing.

In terms of deployment, I’ve had quite the saga, which is the main reason why the site is late in coming. Originally, I was looking at hosting at either WebHost4Life or DiscountASP.NET. DiscountASP.NET looked totally pro and seemed like it was targeted at developers, unlike a lot of other hosts that target your mum. However, WebHost4Life seemed to provide similar features with more bandwidth and disk space for half the price. Their old site had a few awards on it, which lent it credibility to me (they’ve since changed it and those awards have disappeared). So I went with them over DiscountASP.NET. Unfortunately, their services never worked correctly. I had to talk to support to get signed up, since I didn’t want to transfer my domain over to them (a common need, surely!). I had to talk to support to get FTP working. I had to talk to support when the file manager control panel didn’t work. The last straw for me was when I deployed DigitallyCreated, and I got an AccessViolationException complaining that I probably had corrupt memory. After talking to support, they fixed it for me and declared the site operational. Of course, they didn’t bother to go past the front page (and ignored the broken Twitter feed). All pages other than the front page were broken (seemed like the ASP.NET MVC URL routing wasn’t happening). I talked to support again and they spent the week making it worse, and at the end the front page didn’t even load any more.

So instead I discovered WinHost, who offer better features than WebHost4Life (though with less bandwidth and disk space), but for half the price and without requiring any yearly contract! I signed up with them today, and within a few hours, DigitallyCreated was up and running perfectly. Wonderful! They even offer IIS Manager access to IIS and SQL Server Management Studio access to the database. Thankfully, I risked WebHost4Life knowing that they have a 30-day money back guarantee, so providing they don’t screw me, I’ll get my money back from them.

Now that v4.0 is up and running, I will start blogging again. I’ve got some good topics and technologies that I’ve been saving throughout my hiatus in January to blog about for when the site went live. DigitallyCreated v4.0 has been a long time coming, and I hope you like it, and now, thanks to comments, you can tell me how much it sucks!

ASP.NET MVC Model Binders + RegExs + LINQ == Awesome

December 10, 2009 2:00 PM by Daniel Chambers

I was working on some ASP.NET MVC code today and I created this neat little solution that uses a custom model binder to automatically read in a bunch of dynamically created form fields and project their data into a set of business entities which were returned by the model binder as a parameter to an action method. The model binder isn't particularly complex, but the way I used regular expressions and LINQ to identify and collate the fields I needed to create the list of entities from was really neat and cool.

I wrote a JavaScript-powered form (using jQuery) that allowed the user to add and edit multiple items at the same time, and then submit the set of items in a batch to the server for a save. The entity these items represented looks like this (simplified):

public class CostRangeItem
{
    public short VolumeUpperBound { get; set; }
    public decimal Cost { get; set; }
}

The JavaScript code, which I won't go into here as it's not particularly cool (just a bit of jQuery magic), creates form items that look like this:

<input id="VolumeUpperBound:0" name="VolumeUpperBound:0" type="text" />
<input id="Cost:0" name="Cost:0" type="text" />

The multiple items are handled by adding more form fields and incrementing the number after the ":" in the id/name. So having fields for two items means you end up with this:

<input id="VolumeUpperBound:0" name="VolumeUpperBound:0" type="text" />
<input id="Cost:0" name="Cost:0" type="text" />
<input id="VolumeUpperBound:1" name="VolumeUpperBound:1" type="text" />
<input id="Cost:1" name="Cost:1" type="text" />

I chose to use the weird ":n" format instead of a more obvious "[n]" format (like arrays) since using "[" is not valid in an ID attribute and you end up with a page that doesn't validate.

I created a model binder class that looked like this:

private class CostRangeItemBinder : IModelBinder
{
    public object BindModel(ControllerContext controllerContext, ModelBindingContext bindingContext)
    {
        ...
    }
}

To use the model binder on an action method, you use the ModelBinderAttribute to specify the type of model binder you want ASP.NET MVC to bind the parameter with:

public ActionResult Create([ModelBinder(typeof(CostRangeItemBinder))] IList<CostRangeItem> costRangeItems)
{
    ...
}

The first thing I did was create two regular expressions so that I could identify which fields I needed to read in (the VolumeUpperBound ones and the Cost ones). I chose to use regular expressions because they're much simpler to write (once you learn them!) than some manual string hacking code, and I could use them to not only identify which fields I needed to read, but also to extract the number after the ":" so I could link up the appropriate VolumeUpperBound field with the appropriate Cost field to create a CostRangeItem object. These are the regular expressions I used:

private static readonly Regex _VolumeUpperBoundRegex = new Regex(@"^VolumeUpperBound:(\d+)$");
private static readonly Regex _CostRegex = new Regex(@"^Cost:(\d+)$");

Both regexs work like this: the ^ at the beginning and the $ at the end means that, in the matching string, there cannot be any characters before or after the characters specified in between those symbols. It effectively anchors the match to the beginning and the end of the string. Inside those symbols, each regex matches its field name and the ":" character. They then define a special "group" using the ()s. Using the group allows me to pull out the sub-part of the regex match defined by this group later on. The \d+ means match one or more digits, which matches the number after the ":". (Strictly speaking, .NET's \d matches any Unicode decimal digit, not just 0-9, unless you pass RegexOptions.ECMAScript.)

Note that I've made the Regex objects static readonly members of my binder class. Keeping a single instance of the regex object, which is immutable, saves .NET from having to recompile a new Regex every time I bind, which I believe is a relatively expensive process.
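As a quick illustration of the capture group, here's a minimal sketch that can be run outside of MVC. The FieldNameParser class and its GetIndex method are names made up for this example (they're not part of the binder); only the regex pattern itself comes from the code above.

```csharp
using System.Text.RegularExpressions;

// Hypothetical helper for illustration only; not part of the binder.
public static class FieldNameParser
{
    // Same pattern as the binder's VolumeUpperBound regex, kept as a
    // single static instance so it is only constructed once.
    private static readonly Regex VolumeUpperBoundRegex =
        new Regex(@"^VolumeUpperBound:(\d+)$");

    // Returns the digits captured after the ":", or null if the
    // field name doesn't match the pattern at all.
    public static string GetIndex(string fieldName)
    {
        Match match = VolumeUpperBoundRegex.Match(fieldName);

        // Groups[0] is the whole match; Groups[1] is the first set of ()s.
        return match.Success ? match.Groups[1].Value : null;
    }
}
```

With this, GetIndex("VolumeUpperBound:12") gives back "12", while a name with anything extra in front of it, such as "xVolumeUpperBound:12", gives back null because of the ^ anchor.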

Then, in the BindModel method, I first make sure that the model binder has been used on the correct type in the action method:

if (bindingContext.ModelType.IsAssignableFrom(typeof(List<CostRangeItem>)) == false)
    return null;

I then use LINQ to perform matches against all the fields in the current page submit (via the BindingContext's ValueProvider IDictionary). I do this using a Select() call, which performs the match and puts the results into an anonymous class. I then filter out any fields that didn't match the regex using a Where() call.

var vubMatches = bindingContext.ValueProvider
    .Select(kvp => new
        {
            Match = _VolumeUpperBoundRegex.Match(kvp.Key),
            Value = kvp.Value.AttemptedValue
        })
    .Where(o => o.Match.Success);

var costMatches = bindingContext.ValueProvider
    .Select(kvp => new
        {
            Match = _CostRegex.Match(kvp.Key),
            Value = kvp.Value.AttemptedValue
        })
    .Where(o => o.Match.Success);

Now I need to link up VolumeUpperBound fields with their associated Cost fields. To do this, I do an inner equijoin using LINQ. I join on the result of that regex group that I created that extracts the number from the field name. This means I join VolumeUpperBound:0 with Cost:0, and VolumeUpperBound:1 with Cost:1 and so on.

List<CostRangeItem> costRangeItems =
    (from vubMatch in vubMatches
     join costMatch in costMatches
         on vubMatch.Match.Groups[1].Value equals costMatch.Match.Groups[1].Value
     where ValuesAreValid(vubMatch.Value, costMatch.Value, controllerContext)
     select new CostRangeItem
         {
             Cost = Decimal.Parse(costMatch.Value),
             VolumeUpperBound = Int16.Parse(vubMatch.Value)
         }
    ).ToList();

return costRangeItems;

I then use a where clause to ensure the values submitted are actually of the correct type, since the Value member is actually a string at this point (I pass them into a ValuesAreValid method, which returns a bool). This method marks the ModelState with an error if it finds a problem. I then use Select to create a CostRangeItem per join and copy in the values from the form fields using an object initialiser. I can then finally return my List of CostRangeItem. The ASP.NET MVC framework ensures that this result is passed to the action method that declared that it wanted to use this model binder.
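I haven't shown ValuesAreValid itself, so here is a rough sketch of the kind of check it performs. This is a stand-in written for this post rather than the real method: the real one takes the ControllerContext and records problems in the ModelState, whereas this version (the CostRangeItemValidator class and its errors parameter are assumptions) collects messages in a plain list so that it can run without MVC.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for ValuesAreValid. The real method marks the
// ModelState with errors; a plain collection is swapped in here.
public static class CostRangeItemValidator
{
    public static bool ValuesAreValid(string volumeUpperBound, string cost, ICollection<string> errors)
    {
        short parsedVolume;
        decimal parsedCost;
        bool valid = true;

        // TryParse returns false rather than throwing on bad input.
        if (!Int16.TryParse(volumeUpperBound, out parsedVolume))
        {
            errors.Add("VolumeUpperBound must be a whole number that fits in a short.");
            valid = false;
        }

        // Uses the current culture, the same as the Decimal.Parse call in the query.
        if (!Decimal.TryParse(cost, out parsedCost))
        {
            errors.Add("Cost must be a decimal number.");
            valid = false;
        }

        return valid;
    }
}
```

Both values are always checked, rather than stopping at the first failure, so that the user can be told about every bad field in one go.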

As you can see, the solution ended up being a neat declarative way of writing a model binder that can pull in and bind multiple objects from a dynamically created form. I didn't have to do any heavy lifting at all; regular expressions and LINQ handled all the munging of the data for me! Super cool stuff.
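If you want to play with the regex-and-join idea without spinning up an MVC project, the following is a cut-down sketch that works on plain key/value pairs instead of the ValueProvider. The CostRangeItemCollator class, its Collate method, and the IsShort/IsDecimal helpers are invented for this example, and invalid values are simply skipped rather than reported to the ModelState.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.RegularExpressions;

public class CostRangeItem
{
    public short VolumeUpperBound { get; set; }
    public decimal Cost { get; set; }
}

// Hypothetical MVC-free version of the binder's core logic.
public static class CostRangeItemCollator
{
    private static readonly Regex VolumeUpperBoundRegex = new Regex(@"^VolumeUpperBound:(\d+)$");
    private static readonly Regex CostRegex = new Regex(@"^Cost:(\d+)$");

    public static List<CostRangeItem> Collate(IEnumerable<KeyValuePair<string, string>> fields)
    {
        // Materialise once, since the fields are scanned by both queries.
        var fieldList = fields.ToList();

        var vubMatches = fieldList
            .Select(kvp => new { Match = VolumeUpperBoundRegex.Match(kvp.Key), kvp.Value })
            .Where(o => o.Match.Success);

        var costMatches = fieldList
            .Select(kvp => new { Match = CostRegex.Match(kvp.Key), kvp.Value })
            .Where(o => o.Match.Success);

        // Inner equijoin on the number captured after the ":".
        return (from vubMatch in vubMatches
                join costMatch in costMatches
                    on vubMatch.Match.Groups[1].Value equals costMatch.Match.Groups[1].Value
                where IsShort(vubMatch.Value) && IsDecimal(costMatch.Value)
                select new CostRangeItem
                {
                    Cost = Decimal.Parse(costMatch.Value),
                    VolumeUpperBound = Int16.Parse(vubMatch.Value)
                }
               ).ToList();
    }

    private static bool IsShort(string value)
    {
        short ignored;
        return Int16.TryParse(value, out ignored);
    }

    private static bool IsDecimal(string value)
    {
        decimal ignored;
        return Decimal.TryParse(value, out ignored);
    }
}
```

Because it's an inner join, a VolumeUpperBound field with no matching Cost field (or vice versa) silently drops out of the result, as does any pair whose values fail to parse.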

DigitallyCreated Utilities Now Open Source

August 05, 2009 2:00 PM by Daniel Chambers

My part time job for 2009 (while I study at university) has been working at a small company called Onset doing .NET development work. Among other things, I am (with a friend who also works there) re-writing their website in ASP.NET MVC. As I wrote code for their website I kept copy/pasting in code from previous projects and thinking about ways I could make development in ASP.NET MVC and Entity Framework even better.

I decided there needed to be a better way of keeping all this utility code that I kept importing from my personal projects into Onset's code base in one place. I also wanted a place that I could add further utilities as I wrote them and use them across all my projects, personal or commercial. So I took my utility code from my personal projects, implemented those cool ideas I had (on my time, not on Onset's!) and created the DigitallyCreated Utilities open source project on CodePlex. I've put the code out there under the Ms-PL licence, which basically lets you do anything with it.

The project is pretty small at the moment and only contains a handful of classes. However, it does contain some really cool functionality! The main feature at the moment is making it really really easy to do paging and sorting of data in ASP.NET MVC and Entity Framework. MVC doesn't come with any fancy controls, so you need to implement all the UI code for paging and sorting functionality yourself. I foresaw this becoming repetitive in my work on the Onset website, so I wrote a bunch of stuff that makes it ridiculously easy to do.

This is the part where I'd normally jump into some awesome code examples, but I already spent a chunk of time writing up a tutorial on the CodePlex wiki (which is really good by the way, and open source!), so go over there and check it out.

I'll be continuing to add to the project over time, so I thought I might need some "project values" to illustrate the quality level that I want the project to be at:

- To provide useful utilities and extensions to basic .NET functionality that save developers time

- To provide fully XML-documented source code (nothing is more annoying than undocumented code)

- To back up the source code documentation with useful tutorial articles that help developers use this project

That's not just guff: all the source code is fully documented and that tutorial I wrote is already up there. I hate open source projects that are badly documented; they have so much potential, but learning how they work and how to use them is always a pain in the arse. So I'm striving to not be like that.

The first release (v0.1.0) is already up there. I even learned how to use Sandcastle and Sandcastle Help File Builder to create a CHM copy of the API documentation for the release. So you can now view the XML documentation in its full MSDN-style glory when you download the release. The assemblies are accompanied by their XMLdoc files, so you get the documentation in Visual Studio as well.

Setting all this up took a bit of time, but I'm really happy with the result. I'm looking forward to adding more stuff to the project over time, although I might not have a lot of time to do so in the near future since uni is starting up again shortly. Hopefully you find what it has got as useful as I have.

ASP.NET MVC Compared to JSF

June 10, 2009 3:00 PM by Daniel Chambers

Updated on 2009/06/12 & 2009/06/13.

Today I'm going to do a little no-holds-barred comparison of ASP.NET MVC and JavaServer Faces (JSF) based on my impressions when working with both of them. I worked with ASP.NET MVC in my Enterprise .NET university subject and with JSF in my Enterprise Java subject. Please note that this is in no way a scientific comparison of the two technologies and is simply my view of the matter based on my experiences.

ASP.NET MVC is the new extension to ASP.NET that allows you to write web pages using the Model View Controller pattern. Compared to the old ASP.NET Forms way of doing things, it's a breath of fresh air. JSF is one of the many Java-based web application frameworks that are available. It's basically an extension to JSP (JavaServer Pages), which is a page templating language similar to the way ASP.NET Forms works (except without the code-behind; so it's more like original ASP in that respect).

JSF Disadvantages

It is probably easiest if I start with what is wrong with JSF and what makes it a total pain in the arse to use (you can tell already which slant this post will take! :D)

Faces-Config.xml

In years past, the world went XML-crazy (this has lessened in recent years). Everybody was jumping aboard the XML train and putting everything they could into XML. The faces-config.xml file is a result of this (in my opinion, anyway). It is basically one massive XML file where you define where every link on (almost) every page goes to. You also declare your "managed backing beans", which are just classes that the framework instantiates and manages the lifecycle (application, session, or per-request) of. In addition, you can declare extensions to JSF like custom validators, among other things.

All of this goes into the one XML file. As you can probably see, it soon becomes huge and unwieldy. Also, when working in a team, everybody is always editing this file and as such nasty merge conflicts often occur.

Page Navigation

In JSF, you need to define where all your links go in your faces-config.xml. You can do stuff like wildcards (which lets you describe where a link from any page goes) to help ease this pain, but it still sucks.

Not only that, but every page link in JSF is a POST->Redirect, GET->Response. Yes, that's right, every click is a form submit and redirect. Even from a normal page to the next. If you don't perform the redirect, JSF will still show you the next page, but it shows it under the previous page's URL (kind of like an ASP.NET Server.Transfer).

No Page Templating Support

It seems like a simple thing, but JSF just doesn't have any page templating support (called Master Pages in ASP.NET). This makes keeping a site layout consistent a real pain. We ended up resorting to using Dreamweaver Templates (basically just advanced copy & paste) to keep our site layout consistent. Yuck.

Awkward Communication Between Pages and Between Objects

In JSF, it is awkward and difficult to communicate data between pages. JSF doesn't really support URL query string parameters (since every link is a POST), so you end up having to create an object that sticks around for the user's session and then putting the data that you want on the next page in that, ready for the next page to get. Yuck. There are other approaches, but none of them are really very easy to do.

Communication between "managed backing beans" is awkward. You end up having to manually compile a JSP Expression Language statement to get at other backing beans. Check out this page to see all the awkward contortions you need to do to achieve anything.

ASP.NET MVC vs. JSF Disadvantages

So enough JSF bashing. It's all very well to beat on JSF, but can ASP.NET MVC do all those things that JSF can't do well? The answer is yes, yes it can.

Little XML Configuration

Other than the Web.config file, where you initialise a lot of ASP.NET MVC settings (like integration with IIS, Membership, Roles, Database connection strings, etc), ASP.NET MVC doesn't use any XML. The things that you do have to do in the Web.config are likely things you'll set up once and never touch again, and so are unlikely to cause messy merge conflicts in revision control. Even user authorisation for page access is not done in XML (unlike JSF, and for that matter, ASP.NET Forms); it's done with attributes in the code.

RESTful URLs and Page Navigation

ASP.NET MVC has a powerful URL routing engine that allows (and encourages) you to use RESTful URLs. When you create page links, ASP.NET MVC automatically creates the correct URL to the particular action (on a controller) that you want to perform by doing a reverse lookup in the routing table. No double page requests here, and if you change what your URLs look like you don't need to update all your links, you just change the routing table.

Master Pages

ASP.NET MVC inherits the concept of Master Pages from ASP.NET Forms. Master pages let you easily define what every page has in common, then for each page, define only what varies. It's a powerful templating technology.

Easy Communication Between Pages

Communication between pages is easy in ASP.NET MVC since it fully supports getting data from both POST and GET (URL query string parameters). It also provides a neat "TempData" store that you can place things in that will be passed on to the next page loaded, and then are automatically destroyed. This is extremely useful for passing a message onto the next page for it to display an "operation performed" message after a form submit.

JSF Advantages

As much as I dislike JSF, it does have a few things going for it that ASP.NET MVC just doesn't have.

Multi-platform Support

One of the biggest criticisms of any .NET technology is that it only runs on Windows. And while I may be a big fan of Windows, when it comes to servers, other operating systems can be viable alternatives. JSF gives you the flexibility to choose what operating system you want to run your web application on.

Multiple Implementations and Multiple Web Application Server Support

JSF is a standard, so there are many different implementations of the standard. This means that you can pick and choose which particular implementation best suits your needs (in terms of performance, etc). This encourages competition and ultimately leads to better software.

JSF can run in many different application servers, which gives you the choice of which you'd like to use. Feeling cheap? Use GlassFish, Sun's free application server. Feeling rich and in need of features? Use IBM WebSphere. Feeling the need for speed and no need for all those enterprise technologies like EJBs? Crack out Tomcat.

The Java Ecosystem

Like it or not, Java has a massive following and open-source ecosystem. If you need something, it's probably been done before and is open-source and therefore you can get it for free (probably... if you're closed-source you need to watch out for GPLed software).

Free

Because JSF is free, Java is free, and you can run it all on free operating systems and application servers, you can get a JSF web application up and running for nothing (excluding programming labour, of course).

ASP.NET MVC vs. JSF Advantages

So how does ASP.NET MVC weigh up against the pros of JSF? Not too well.

Only Runs on Windows

ASP.NET MVC will only run on Windows, since it runs on the .NET Framework. You could argue that Mono exists for Linux, but the Mono .NET implementation is always behind Microsoft's implementation, so you can never get the latest technology (and ASP.NET MVC is very recent).

Only Runs in One Application Server

ASP.NET MVC runs in only one application server: IIS. If IIS doesn't do what you want, or doesn't perform like you need it to, too bad, you've got no choice but to use it.

Update: A friend told me about how you can actually run ASP.NET in Apache. However, that plugin (mod_aspdotnet) was retired from Apache due to lack of support. Its successor, mod_mono, allows you to run ASP.NET in Apache using Mono. However, at the time of writing I see multiple tests failing on their test page. I don't know how severe these bugs are, but it's certainly not the level of support you receive from Microsoft in IIS.

Decent Ecosystem

Most people probably would have assumed I would bash the .NET ecosystem for not being very open source and that is true, up to a point. However, in recent times the .NET ecosystem has been becoming more and more open-source. ASP.NET MVC itself is open source! All this said and done, Java is still far more open-source and free than .NET.

Not Really Free

Although you can download the .NET SDK for free, you really do need to purchase Visual Studio to be effective while developing for it. You'll also need to pay for Windows on which to run your web server.

ASP.NET MVC Other Advantages

Other than all the sweet stuff I mentioned above, what else does ASP.NET MVC have to offer?

Clean Code Design

ASP.NET MVC makes it very easy to write neat, clean and well designed code because of its use of the Model View Controller pattern. JSF is supposedly MVC, but I honestly couldn't tell how they were using it. My code in JSF was horrible and awkward. ASP.NET MVC is clearly MVC and benefits a lot from it. You can unit test your controllers easily, and your processing logic is kept well away from your views.

Support for Web 2.0 Technologies

Web 2.0 is a buzzword that represents (in my mind) the use of JavaScript and AJAX to make web pages more rich and dynamic. ASP.NET MVC makes it pretty easy to support these technologies and even ships with jQuery, which is fully supported and documented. JSF doesn't come with any of this stuff out of the box and, due to its awkward page navigation system, makes it difficult to incorporate one of the existing technologies into it. The JSF way is most definitely not the Web 2.0 way.

Visual Studio

Although you have to pay for Visual Studio, it is an excellent IDE to work with ASP.NET MVC in. NetBeans, the IDE I used JSF in, was quite possibly one of the worst IDEs I have ever used. It was awkward to use, slow, unintuitive and buggy. Visual Studio has an inbuilt, lightweight web server that you can run your web applications on while developing. You just build your application and, bam, it's already automatically running. In NetBeans, you have to deploy the application (slow!) to GlassFish and sometimes it will bollocks it up somehow and you'll just get random uncaught exceptions that a clean and build and redeploy will somehow fix. That sort of annoying, time-wasting and confusing stuff just doesn't occur in Visual Studio. You really do get what you pay for.

In conclusion, I feel that ASP.NET MVC is a far better Web Application Framework than JSF. It makes it so easy to write neat and well-designed code that generates modern Web 2.0-style pages. JSF just ends up getting in your way. While coding ASP.NET MVC, I kept going "oh wow, that's nice!", but while developing JSF I just swore horribly. And that really tells you something.

Update: I've been told that JSF 2.0 (as yet unreleased at the time of writing) fixes many of the problems I've mentioned above. So, it might be worth a re-evaluation once it is released.